AI-powered, human enhanced! How to take feedback analytics to the next level

Can you trust AI when you don't understand the way it makes decisions? Or do you need control? Learn how AI and people can collaborate to get the best insights from feedback faster.

When you hear Artificial Intelligence, do you imagine a super smart computer algorithm?

You might be thinking of a human-like 'general AI', able to converse on any topic.

Or you might be like me: imagining a practical AI that performs a specific task faster and more accurately than a human. Let’s say it learns over time and becomes smarter. But it’s a black box, and only brainy data scientists can tweak it to improve performance.

This reminds me of my robot vacuum cleaner. My kids gave it a name: Cleano. It gets the job done. Most of the time it’s better than me. I know that it has a camera of sorts and it maps out my rooms. It can vacuum and mop at the same time, and I love it. I don’t need to know more about its inner workings.

But is it the same when it comes to AI analyzing feedback? Can you trust a black box approach? Or do you need control? Let’s dig into this topic and see some real examples of how AI and people can collaborate to get the best insights from feedback faster.

Why we need trust when it comes to AI-powered feedback analysis

Back in 2015, I was still consulting in the general area of AI. When I say AI, I mean Machine Learning and NLP algorithms used for automating various tasks. One app we designed automatically linked a wine label to a specific product. Another solution cleaned boilerplate text from emails.

My clients wanted specific solutions, and black box AI made sense. In both cases, there was room for error - as with my vacuum robot.

Then I came across customer feedback analysis. Here, businesses of all kinds needed to understand what customers (or employees) say at scale. I immediately thought of a variety of algorithms that could help. But instead of getting to work I started interviewing people who did this for a living. I asked them, if AI were to help here, what’s most important to you?

To my surprise, everyone said they needed trust!

After this initial research, we launched Thematic, a customer feedback solution that builds trust through transparency. I'll explain how shortly.

Building trust remains a priority for us. It was the number one discussion point at our recent customer advisory board session, five years since we first launched.

There are two main reasons why trust is key for customer feedback analysis:

1. Analyzing customer feedback isn’t a new requirement. Market researchers have been analyzing customer sentiment at scale for decades. They know what great looks like. But it’s a painful and time-consuming task they want to go away.

2. Unlike other AI use cases, in feedback analysis, the output is used to make important business decisions. So the research methodology must be solid. Sure, there might be occasional misses. People can also be biased when analyzing feedback. But overall, trust in accuracy is critical. Especially if you are reporting to people likely to question the research outcomes due to their own biases.

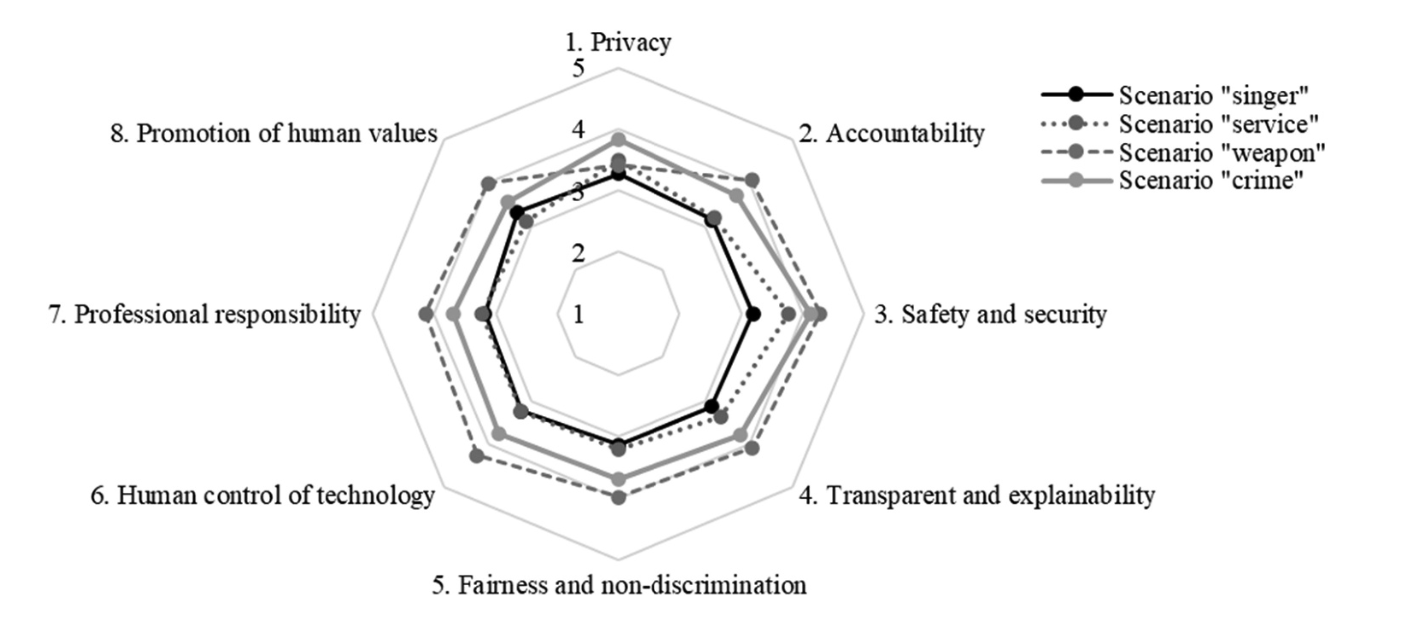

Japanese researchers at the University of Tokyo measured trust in AI depending on the application. They found that it's lowest when AI operates unmanned weapons, and highest when it is trying to replicate the voice of a dead famous person. No surprise here!

How AI algorithms make decisions

Customer feedback analysis is not the first application that needs trust in AI. There’s AI-powered health diagnostics. There’s AI-powered risk assessment in the financial sector. Here, experts verify the final decisions, and this gradually builds trust in AI.

Because it’s important for experts to understand why AI makes certain decisions, researchers developed the idea of Transparent AI, also called Explainable AI. Experts are presented with the critical data points that led to a decision. For example, part of an X-ray scan and how it’s similar to other scans.

To understand how AI makes decisions and why transparency matters, let’s look at an example where AI failed. Researchers built a model that recognizes whether an image shows a husky or a wolf. Hundreds of examples were fed to the AI for training, and some were held back for testing.

For one test picture, the AI said wolf, but it was a husky. Advanced analysis of the model revealed why. The AI made this mistake because it focused on the background instead of the facial features of the animals. In the training examples, wolves had snow in the background, whereas huskies had greenery. As you can see, knowing how AI makes a decision is critical to understanding whether it can be trusted.

Lack of trust in rule-based customer feedback analysis

Automated feedback analysis has been around for a decade or so. The first approach was based on rules. For example, if words like “expensive”, “cheap”, “costs” etc. were detected in feedback, feedback was categorized under the topic “pricing”.

Initially, there's full trust in this approach. You create the rules, you know what they are and what they cover. But then things break down:

1. Complexity. Soon you realize that complex Boolean rules are required to capture the complexities of language. For example, to match “friendly service”, you need a rule like (“friendly” OR “cheerful”) AND NOT “user friendly”. Eventually the set of rules becomes large, possibly co-created by whole teams over many years. It becomes harder to retain trust.

2. Lack of discovery. To make sure rules pick up all emerging topics, you need to constantly monitor feedback. This is time-consuming and often not done regularly. In situations where feedback changes often (e.g. with constantly evolving products or services), rules quickly become obsolete and trust erodes.
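To make both problems concrete, here is a minimal Python sketch of rule-based categorization. The `RULES` table, the `categorize` function and the rule format (a topic matches when any trigger phrase is present and no excluded phrase is) are assumptions made for illustration, not how any particular product stores its rules.

```python
import re

# Hypothetical rule set: "any" lists trigger phrases, "none" lists phrases that veto the topic.
RULES = {
    "pricing": {"any": ["expensive", "cheap", "costs"], "none": []},
    "friendly service": {"any": ["friendly", "cheerful"], "none": ["user friendly"]},
}

def contains(text: str, phrase: str) -> bool:
    """True if the phrase occurs in the text as whole words, ignoring case."""
    return re.search(r"\b" + re.escape(phrase) + r"\b", text, re.IGNORECASE) is not None

def categorize(text: str) -> list[str]:
    """Return every topic whose rule matches: (any trigger) AND NOT (any exclusion)."""
    topics = []
    for topic, rule in RULES.items():
        triggered = any(contains(text, p) for p in rule["any"])
        vetoed = any(contains(text, p) for p in rule["none"])
        if triggered and not vetoed:
            topics.append(topic)
    return topics

print(categorize("Too expensive for what you get"))        # ['pricing']
print(categorize("The staff were friendly and cheerful"))  # ['friendly service']
print(categorize("The app is very user friendly"))         # []
print(categorize("Login keeps failing"))                   # []
```

The last call shows the discovery problem: no rule covers login issues, so that feedback stays uncategorized until someone notices and writes a new rule.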

Despite these challenges, many feedback management solutions are still using this approach. This is because creating rules builds initial trust and a sense of control. Unfortunately, by the time you realize the trust cannot be maintained, many hours are already sunk into creating topics.

Lack of trust in supervised categorization

A common AI-powered approach to feedback analysis is supervised categorization. Here, an AI model is trained by feeding it examples of manually categorized pieces of feedback. A model trained on thousands of examples can be highly accurate.

The benefit of this approach is that you don’t need to think up rules. But just like with the husky vs. wolf example, we don’t really know how the AI captures a topic from the example. So, when the model makes a mistake, tweaking it becomes complicated. The whole idea of a black box is not helpful here.

In addition, this model can only pick up things it was trained on. So, just like the rules-based approach, it will miss any new emerging topics in the data. This also affects the trust.
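Here is a rough sketch of supervised categorization, using a tiny Naive Bayes classifier written in plain Python. The training examples, the labels and the function names are made up for illustration, and Naive Bayes is just one simple stand-in for whatever model a real system would use.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    return text.lower().split()

def train(examples):
    """examples: list of (text, label) pairs labeled by a person."""
    word_counts = defaultdict(Counter)  # label -> word frequencies
    label_counts = Counter()            # label -> number of examples
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    return word_counts, label_counts

def predict(model, text):
    """Pick the most probable label, using add-one smoothing for unseen words."""
    word_counts, label_counts = model
    vocab = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in tokenize(text):
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train([
    ("the price is too expensive", "pricing"),
    ("cheap plan but hidden costs", "pricing"),
    ("support staff were friendly", "service"),
    ("helpful and friendly support team", "service"),
])
print(predict(model, "very expensive plan"))  # pricing
print(predict(model, "friendly staff"))       # service
# A login complaint still gets one of the two trained labels,
# because the model has no other label to choose from.
print(predict(model, "the app crashes on login"))
```

Notice that nothing in the learned word counts tells you, in plain terms, why a given comment landed in a category, and a brand-new topic is silently forced into an existing one.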

Lack of trust in topic modeling

Insights teams know the importance of discovery in feedback. Picking up on emerging issues early can be critical for a business. So, data scientists often suggest topic modeling, most commonly implemented with an algorithm called LDA (Latent Dirichlet Allocation).

How it works is that an AI model is created from feedback alone. No rules, no labeling required. Instead, the model captures how words are used alongside other words in text. It can then detect groups of words (topics) that capture specific aspects. For example, if you were to run it on beer reviews, you might find topics like (“toffee”, “burned sugar”, “malty”), (“coffee”, “chocolate”, “cacao”) and (“citrusy”, “fruity”, “grapefruit”). It’s quite a black box.
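The sketch below is not LDA. It only illustrates the raw signal such models build on: which words tend to appear together in the same piece of feedback. The reviews and the `cooccurrence` function are invented for this example.

```python
from collections import Counter
from itertools import combinations

def cooccurrence(reviews):
    """Count how often each pair of words appears in the same review."""
    pairs = Counter()
    for review in reviews:
        # Sorting makes each pair appear in one canonical (alphabetical) order.
        words = sorted(set(review.lower().split()))
        pairs.update(combinations(words, 2))
    return pairs

reviews = [
    "malty toffee finish",
    "toffee and malty sweetness",
    "citrusy grapefruit aroma",
    "fruity citrusy grapefruit",
]
pairs = cooccurrence(reviews)
print(pairs[("malty", "toffee")])        # 2
print(pairs[("citrusy", "grapefruit")])  # 2
print(pairs[("grapefruit", "toffee")])   # 0
```

Words that keep showing up together ("malty" with "toffee") end up in the same topic, while words that never meet ("grapefruit" and "toffee") do not. A real topic model turns counts like these into probabilities, which is where the black box feeling comes from.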

It is often effective for a one-off analysis of certain feedback. For example, a data science team once ran topic modeling on Facebook app reviews. They found an unexpected theme that drove the negative reviews: It was that Candy Crush Saga crashed the app! Not the usual topics like “user experience”, “installation” or “news feed” that product managers initially wanted to include in their set of rules or supervised topics.

But when research teams try to use this approach for monthly reporting, things start to break down. Topic modeling can't maintain the same set of topics for consistency AND also discover new topics.

Lack of trust in giant Deep Learning models

Recently, AI researchers turned to creating giant models like OpenAI’s GPT-3 and Google’s LaMDA. Some of these have been applied to customer feedback.

These models are trained on huge datasets over several months. The compute and energy needed to train them cost millions of dollars. But once trained, the model can do a variety of impressive tasks: from solving math problems or conversing with you to creating poetry. A Google engineer working on LaMDA was so convinced the AI was sentient that he helped it hire a lawyer to represent its interests!

Most AI researchers in and outside Google disagree that these giant models are sentient. That said, their fluency in language is impressive. You can load a set of data into GPT-3 and ask it to summarize what people are saying. GPT-3 will write a perfectly coherent summary, similar to those created by insights managers.

However, like other AI models, they often produce summaries that are off. If the 'creativity' parameter is not set correctly, the summary will include things that are not present in the data. Customers might say that the product is fun and engaging, but could have better design. But the AI model’s summary reports that customers want dark mode design, because that’s something it's often seen in the training data.
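In models of this kind, the 'creativity' setting usually corresponds to a parameter called sampling temperature. Assuming that is what is meant here, the sketch below shows its effect. The two candidate phrases and their scores are invented, and `softmax_with_temperature` is a textbook formula rather than any vendor's API.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for how the summary might continue.
candidates = ["better design", "dark mode"]
scores = [3.0, 1.0]  # "better design" is supported by the data, "dark mode" is not

cautious = softmax_with_temperature(scores, temperature=0.5)
creative = softmax_with_temperature(scores, temperature=2.0)
print(round(cautious[1], 3))  # 0.018
print(round(creative[1], 3))  # 0.269
```

With the higher setting, the unsupported "dark mode" continuation goes from a rare pick to roughly one in four, which is how plausible-sounding but ungrounded details can slip into a summary.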

Another problem with such summaries is that they aren’t quantified. They don’t tell you how many people like or dislike something, or, for example, the overall impact of a given feature.

How to build trust in AI-powered feedback analysis

We’ve looked at a variety of approaches that automate feedback analysis: from transparent but hard-to-manage rules to huge black box AI models. Each has advantages and disadvantages.

Overall, there is a huge trend towards black box AI in the industry. Our language is simply too complex to be captured with transparent/explainable AI models. There is no way around using complex Deep Learning models, though not perhaps the largest ones.

So how do we build AI-powered feedback analysis in a way that earns trust?

1. Break down the task into smaller pieces or stages. The smaller the stage, the more predictable and consistent results we can expect from AI.

2. Make it easy to enhance AI with human input. It’s easier for a user to provide input in stages. Ultimately, users should be able to tailor the analysis to make it more useful. But in a way that scales.

3. Provide a pathway to success. What does great look like? When it comes to feedback analysis, even people often aren’t sure if they 'picked up' everything. Use AI to compare feedback analysis results to best-in-class ones. Provide suggestions on how to get there.

Let’s dig deeper into each of these.

Thematic Analysis: Breaking down feedback analysis into stages

What do you do when you work with a giant AI model, and it doesn’t give you the results you need? You're stuck! So why not divide and conquer? It has been a winning strategy for many, from imperialist rulers to creators of sorting algorithms.

Formula 1 teams improve the performance of their cars by optimizing each part: the tires, the body, the engine and sometimes the driver! I’m sure the R&D team behind Roomba approaches this the same way. Similarly, we can get more out of AI-powered feedback analysis by breaking it into stages. Here’s how:

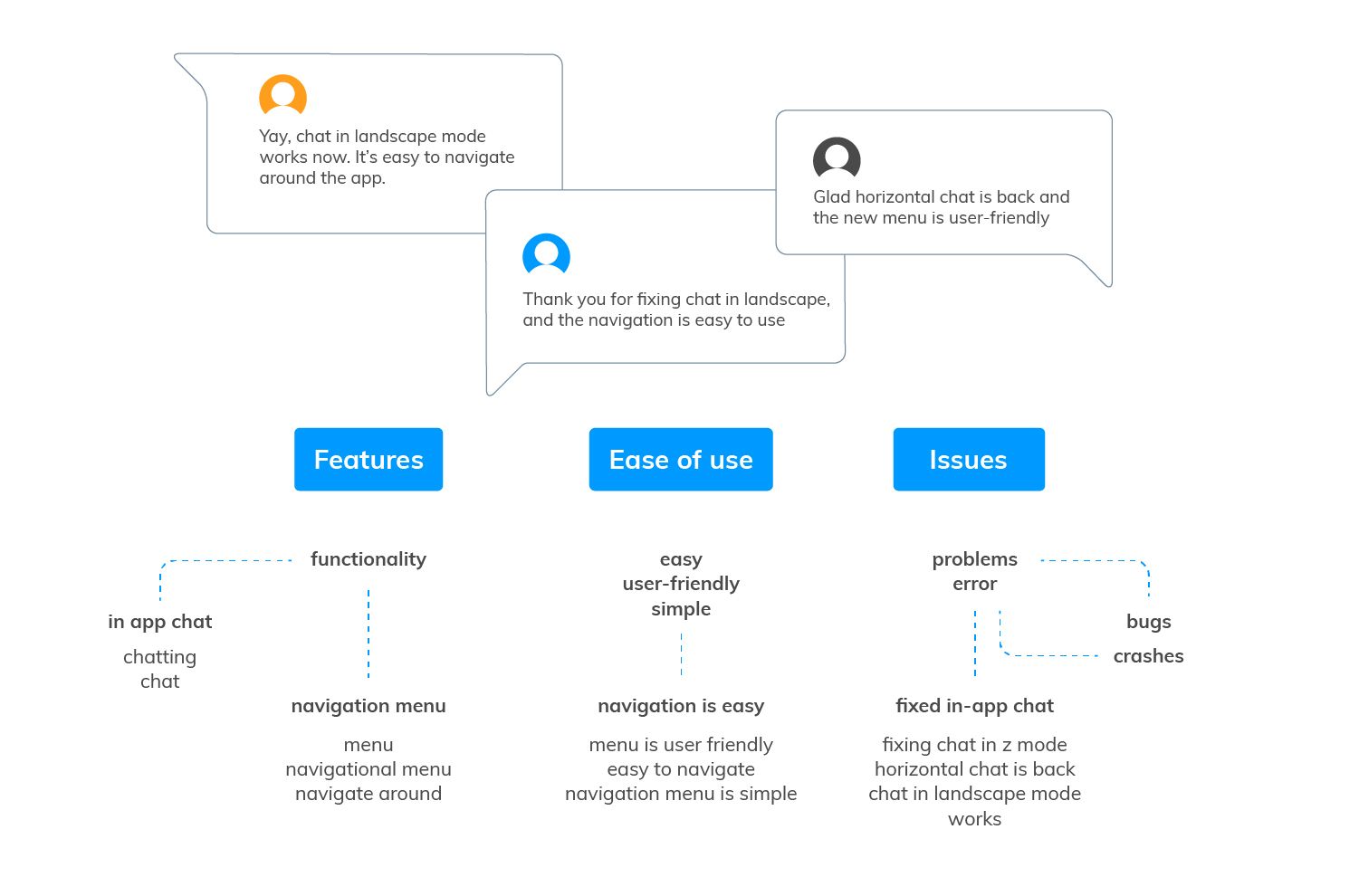

When it comes to analyzing feedback, you'll have heard of many different approaches. But ultimately, there are themes (also called topics, categories or tags) to describe what people are saying. These themes are organized into a hierarchy (also called a taxonomy or code frame) to make it easy to work with them.

Some people start with a set of themes, others start from scratch and let themes emerge from the data. In qualitative research (where you work with a lot of text data), this is called thematic analysis.

When companies gather feedback at scale, there is usually historical data that informs the analysis process, and new feedback that is continuously added to it.

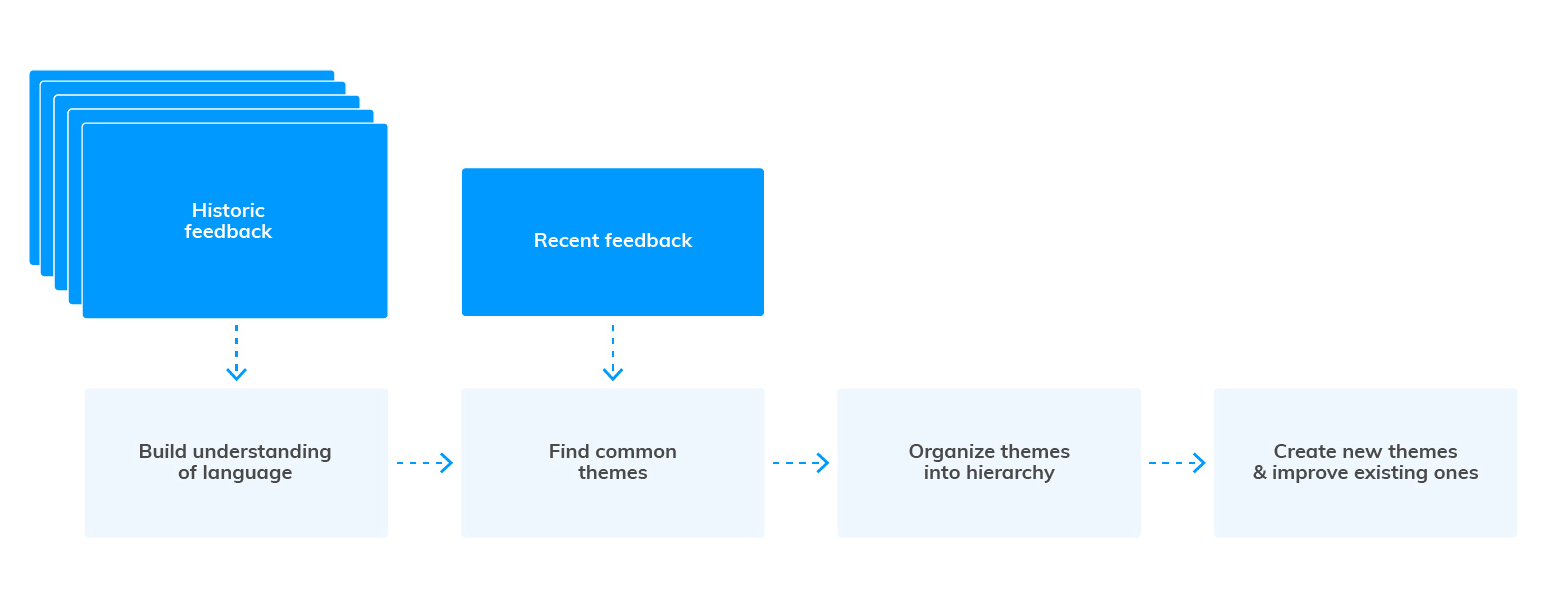

We can break down the analysis as follows:

AI can help in all of these stages.

Most importantly, this makes the analysis semi-transparent and more trusted. Maybe you don’t see what’s happening inside each box individually, but you can tune each separately by providing feedback.

Enhance AI-powered thematic analysis with human input

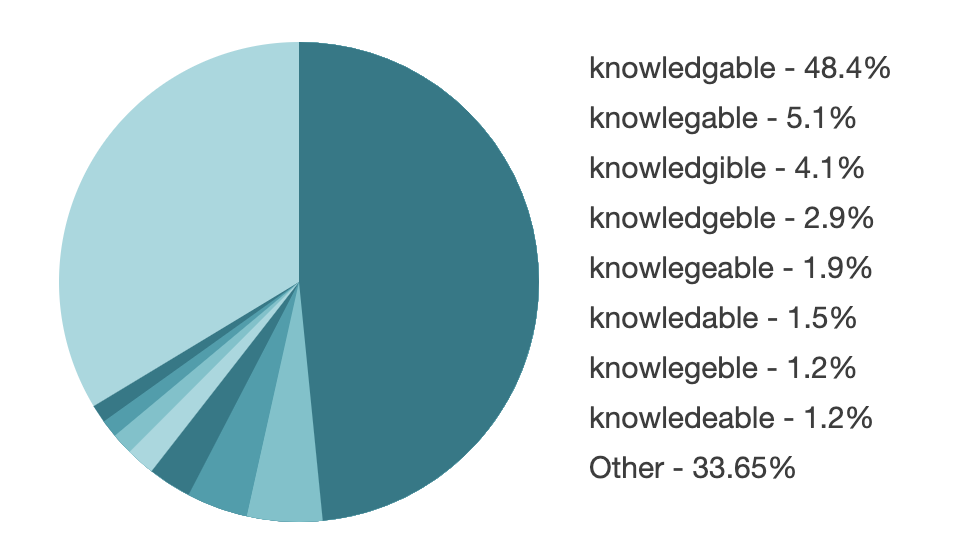

Building an understanding of language covers both how people provide feedback and company-specific terminology. Some of this comes naturally to people, some to AI. For example, AI won’t know some of the product names, abbreviations, etc., but it will know at least 10 ways people can misspell the word “knowledgeable”.

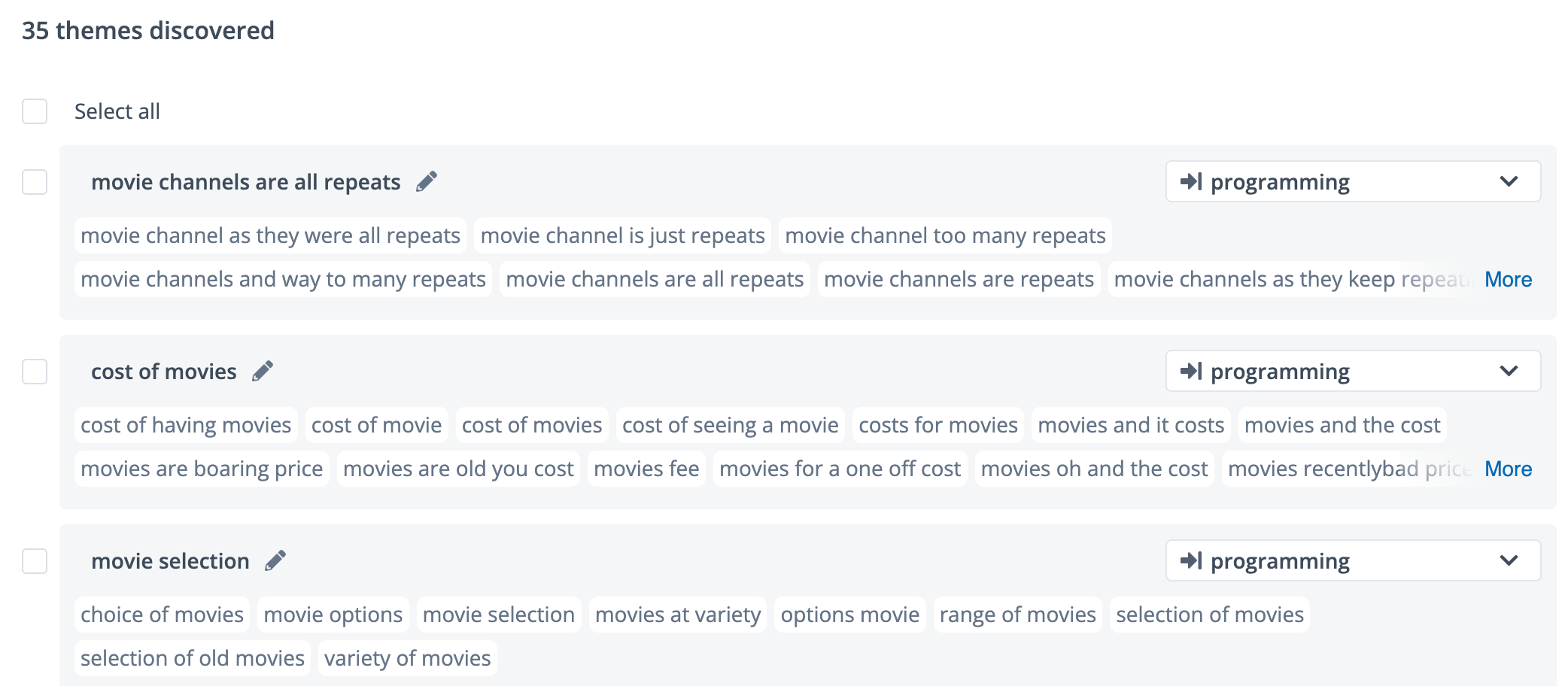

Finding common themes is about finding patterns. AI shines when it needs to tirelessly sift through millions of pieces of feedback. What it doesn’t know is which theme is useful and which isn’t. For example, it might detect that people often mention “quick and easy” which isn’t that useful to know. Or that a time aspect is often mentioned alongside “contacting support”. People are better at deciding what’s useful and what’s not, especially if they have the context of what the user wants to do with the data. “Quick and easy” might be a good indicator that the key marketing message is working!

Organizing themes is important for improving the quality of themes and the ease of working with them. For example, two themes might be discovered which mean the same thing. AI might merge some of them, like “helpful support” and “accommodating support”. But a person can also teach it that “goes the extra mile” falls into the same theme. We can't expect AI to know company-specific facts. For example, which products do we integrate with and which ones are our competitors? This is where the AI and the user need to collaborate.
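Here is one way to picture staged analysis with human input in code. It is a toy sketch under heavy assumptions: the stage functions, the `HumanInput` container and simple substring matching are all invented for illustration, and a real system would use language models where this uses lookups.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class HumanInput:
    """Hypothetical container for user corrections, one field per stage."""
    term_fixes: dict = field(default_factory=dict)    # language stage
    ignored_themes: set = field(default_factory=set)  # discovery stage
    merges: dict = field(default_factory=dict)        # organization stage

def normalize(text, human):
    """Language stage: lowercase and apply known term corrections."""
    return " ".join(human.term_fixes.get(w, w) for w in text.lower().split())

def discover(texts, candidate_themes, human):
    """Discovery stage: find themes per text, skipping ones marked as not useful."""
    return [
        {t for t in candidate_themes if t in text and t not in human.ignored_themes}
        for text in texts
    ]

def organize(themes_per_text, human):
    """Organization stage: fold themes the user declared equivalent into one."""
    return [{human.merges.get(t, t) for t in themes} for themes in themes_per_text]

def analyze(feedback, candidate_themes, human):
    texts = [normalize(t, human) for t in feedback]
    organized = organize(discover(texts, candidate_themes, human), human)
    return Counter(t for themes in organized for t in themes)

feedback = [
    "Helpful support and quick and easy setup",
    "Very accomodating support",
    "Support goes the extra mile",
]
candidates = ["helpful support", "accommodating support", "goes the extra mile", "quick and easy"]
human = HumanInput(
    term_fixes={"accomodating": "accommodating"},
    ignored_themes={"quick and easy"},
    merges={"accommodating support": "helpful support",
            "goes the extra mile": "helpful support"},
)
print(analyze(feedback, candidates, human))  # Counter({'helpful support': 3})
```

Each stage takes its own kind of correction, so when a result looks wrong you can fix the stage responsible instead of retraining everything.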

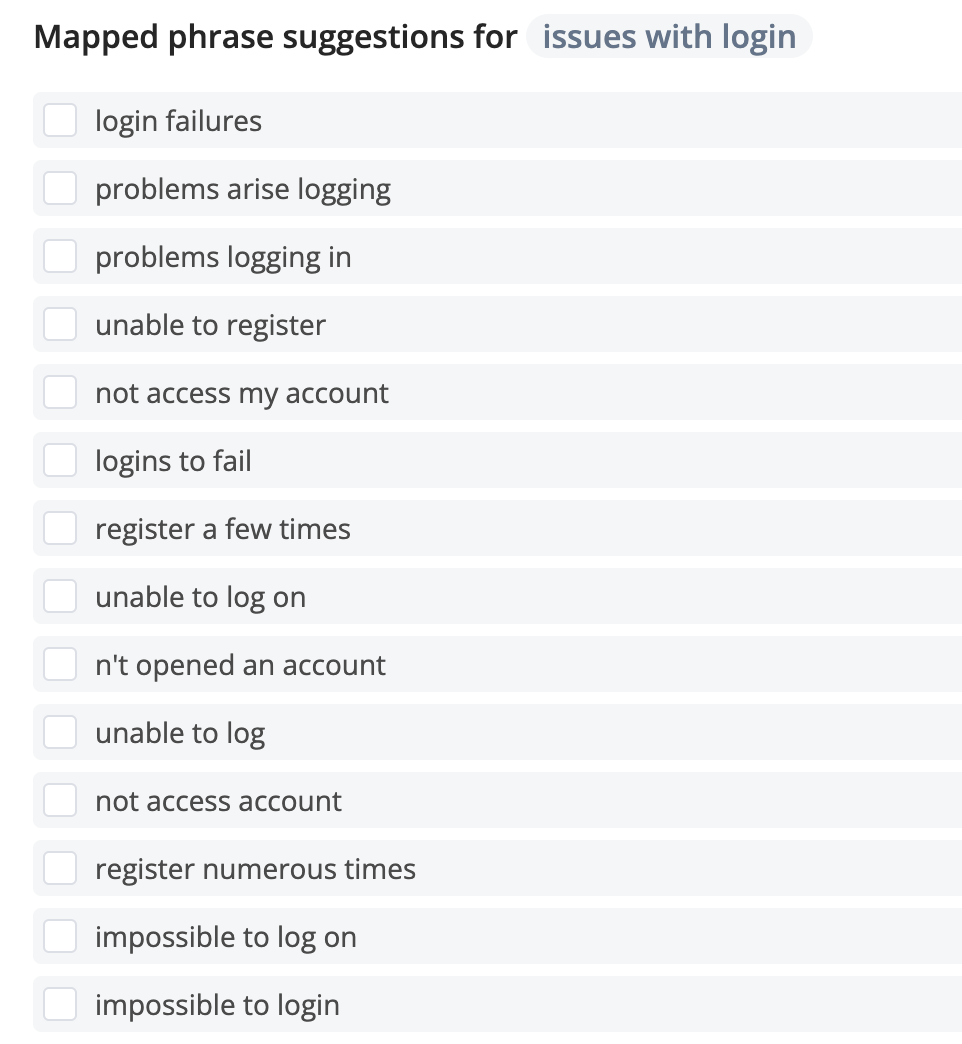

Improving themes beyond what’s been discovered is also common. For example, AI might miss a theme because it’s not as common. But a user might still be interested in tracking it. For example, they might add “issues with login”. AI can suggest hundreds of ways a person might mention this and automatically verify whether it’s in the data.
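As a small sketch of that verification step: suppose the user adds “issues with login” and the AI proposes ways people might phrase it. The variant list below is hand-written and stands in for what a model would suggest, and `verify_theme` is a hypothetical helper.

```python
def verify_theme(variants, feedback):
    """Return the feedback items that mention at least one variant phrase."""
    lowered = [v.lower() for v in variants]
    return [text for text in feedback if any(v in text.lower() for v in lowered)]

# Stand-in for AI-suggested phrasings of "issues with login".
variants = ["can't log in", "cannot log in", "login fails", "unable to sign in"]
feedback = [
    "I cannot log in since the update",
    "Login fails every morning",
    "Love the new dashboard",
]
matches = verify_theme(variants, feedback)
print(len(matches), "of", len(feedback))  # 2 of 3
```

If nothing matches, the theme is simply not present in the data yet, which is useful to know before adding it to a report.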

There’s usually a limit on how many themes are returned. Otherwise, it would be overwhelming. So another example of how AI and a person might work together is to dig deeper into a theme of interest or a subset of the data, which should be prioritized in discovery.

There are different ways to implement this. At Thematic, we decided to focus on a self-service approach. We built a user interface that shows you the AI’s output in every stage and lets you iterate until you are happy with the overall outcome.

Keen to see how you can use Thematic on your own data? Book a consult with one of our team - we'd be thrilled to show you how Thematic works!

Provide a pathway to great results

I’ve talked about the AI-powered vacuum robot in my house. Sure, people might differ in their sensitivity to dirt. But overall, you know a clean house when you see one. Analysis of feedback is different. It's significantly more subjective.

If two people analyze the same 200 pieces of feedback, they will disagree. Both are likely to have doubts: Did I miss any critical themes? Did I portray everything correctly? Researchers are especially aware of the bias they bring into the analysis. Luckily, AI can help with this problem.

Again, we first break this down into parts.

What makes a set of themes both accurate and useful?

· Are they specific enough? If 50% of feedback is about installation issues, it’s accurate, but it’s not specific enough to act on.

· Did we cover all there is to cover? This is where a lot of solutions with pre-defined taxonomies of themes struggle. They are accurate and specific on some of the data, but completely miss some of the data. If what your company does is unique (e.g. not a standard hotel or airline), these taxonomies might miss the bulk of the feedback!

Believe it or not, librarians back in the day had to be both specific and exhaustive when writing the catalogue cards for each book.

So where does AI come in? It can be trained to predict how many themes a piece of text should have vs. how many themes were discovered. It can calculate the specificity of a theme.

💡 Specificity is not all about the number of words. Something like “authentication” is more specific than “great product”. It’s about the amount of information (entropy) that theme carries in that context.
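One possible reading of this idea, sketched in Python: treat a theme as more specific the rarer it is in the feedback, and measure that as self-information in bits. The `information_bits` function and the sample feedback are assumptions for illustration, and this is not necessarily how any product computes specificity.

```python
import math

def information_bits(theme, feedback):
    """Self-information of a theme: -log2 of the share of feedback mentioning it."""
    mentions = sum(theme in text.lower() for text in feedback)
    if mentions == 0:
        raise ValueError(f"theme {theme!r} never occurs in the feedback")
    return -math.log2(mentions / len(feedback))

feedback = [
    "Great product, love it",
    "Great product but authentication keeps failing",
    "Great product overall",
    "Really great product",
]
print(information_bits("authentication", feedback))  # 2.0
print(information_bits("great product", feedback) == 0)  # True
```

Both themes are two words or fewer, yet “authentication” carries two bits of information here while “great product”, which everybody says, carries none.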

Coverage, in turn, is about how many sentences are tagged with themes. In fact, shorter feedback tends to have higher coverage, because it’s more succinct.
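A minimal sketch of a coverage metric, assuming coverage means the share of sentences tagged with at least one theme. The naive sentence splitter, the substring matching and the sample data are simplifications for illustration.

```python
import re

def split_sentences(text):
    """Naive splitter: break on ., ! and ? and drop empty pieces."""
    return [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]

def coverage(feedback, themes):
    """Share of sentences that mention at least one theme."""
    sentences = [s.lower() for text in feedback for s in split_sentences(text)]
    if not sentences:
        return 0.0
    tagged = sum(any(theme in s for theme in themes) for s in sentences)
    return tagged / len(sentences)

feedback = ["Setup was easy. I was travelling last week. Support was slow."]
print(round(coverage(feedback, ["setup", "support"]), 2))  # 0.67
```

The middle sentence carries no theme, so two of three sentences are covered. A short comment like “Support was slow.” on its own would score 100%.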

Knowing your specificity and coverage metrics is not enough. Ideally, you want to know how you compare to those best-in-class AND how to improve the results.

Three other ways AI can enhance feedback analysis

When it comes to feedback analysis, there are three other ways specific AI models can help.

1. Sentiment analysis

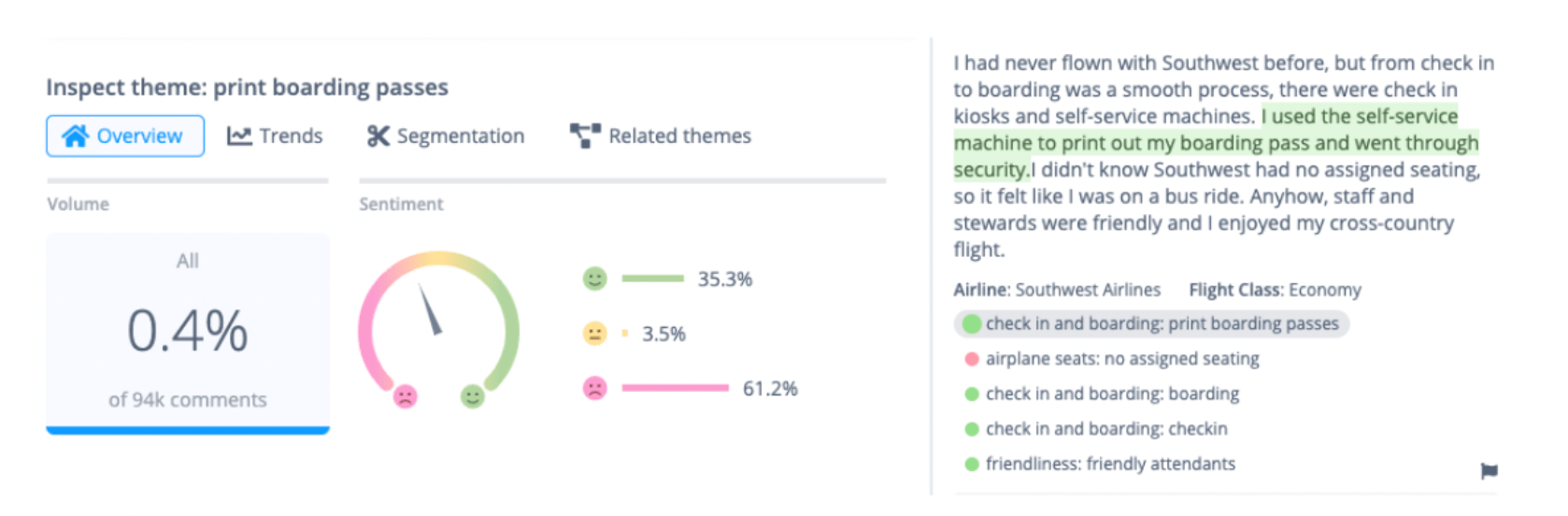

Some themes carry specific sentiment, e.g. “easy to navigate” or “authentication issues”. But the majority of themes will have people who are happy or disappointed with that aspect of the product or service. For example, a product might have features such as “reporting”, “integration with Slack”, “onboarding flow”, and so on. Overall, you want to know: what are we doing well, and which features do we need to improve? Sentiment models can be applied to every part of the sentence linked to a theme to help with this.
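To illustrate sentiment per theme, here is a toy sketch that splits each comment into clauses and scores only the clause where a theme appears. The tiny word lists stand in for a real sentiment model, and every name in the code is hypothetical.

```python
import re
from collections import defaultdict

# Stand-in lexicon; a real sentiment model would replace these two sets.
POSITIVE = {"love", "great", "easy"}
NEGATIVE = {"slow", "confusing", "broken"}

def clause_sentiment(clause):
    """Positive word count minus negative word count for one clause."""
    words = set(re.findall(r"[a-z']+", clause.lower()))
    return len(words & POSITIVE) - len(words & NEGATIVE)

def sentiment_by_theme(feedback, themes):
    """Average sentiment of the clauses in which each theme is mentioned."""
    scores = defaultdict(list)
    for text in feedback:
        # Split on punctuation and on "but", which often flips sentiment.
        for clause in re.split(r"[.,;!?]|\bbut\b", text.lower()):
            for theme in themes:
                if theme in clause:
                    scores[theme].append(clause_sentiment(clause))
    return {theme: sum(v) / len(v) for theme, v in scores.items()}

feedback = [
    "I love the reporting, but the onboarding flow is confusing.",
    "Reporting is great!",
]
print(sentiment_by_theme(feedback, ["reporting", "onboarding flow"]))
# {'reporting': 1.0, 'onboarding flow': -1.0}
```

Scoring the whole first comment at once would give a neutral result and hide both the praise and the complaint.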

2. Feedback type

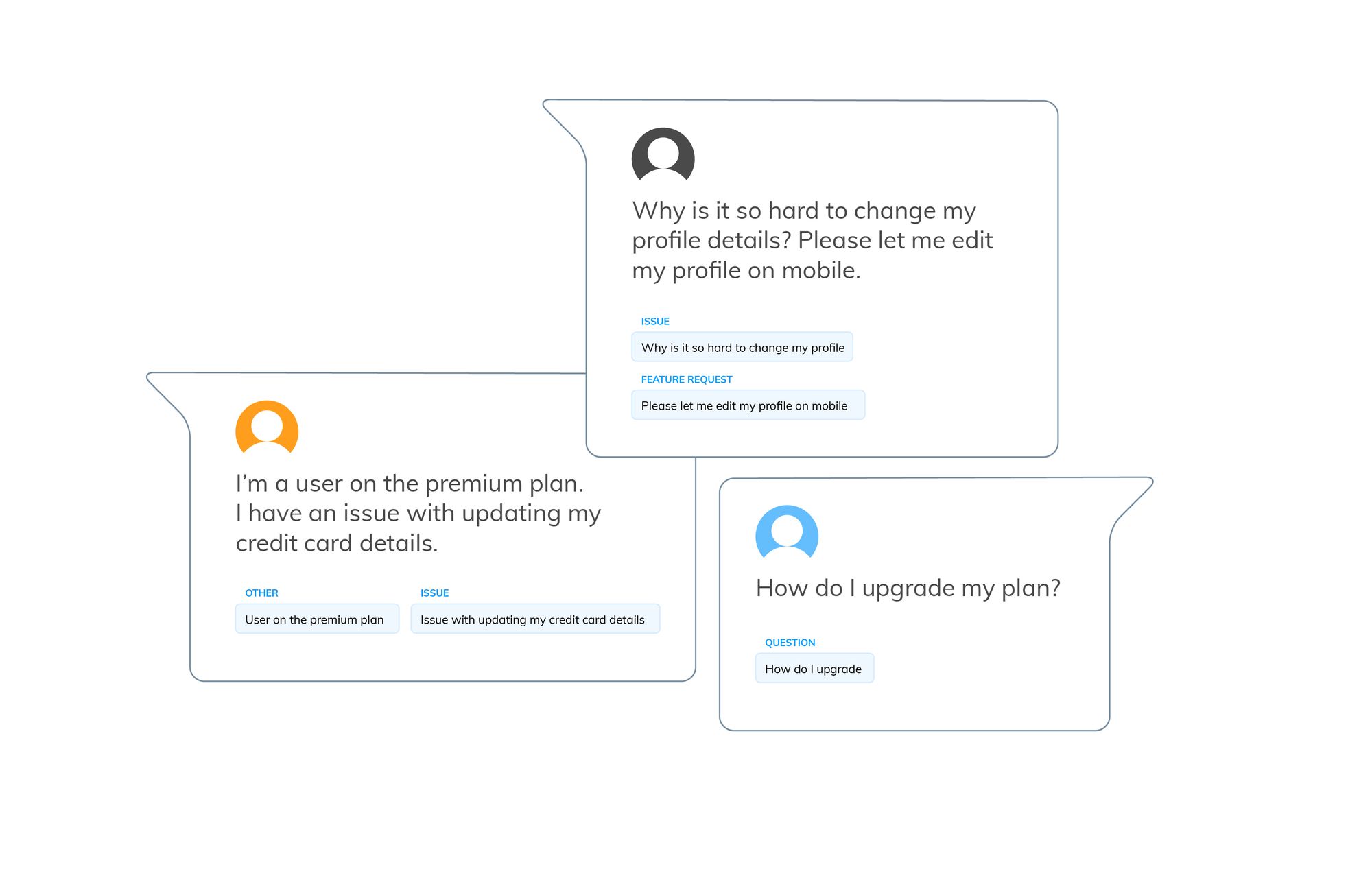

Themes have different importance depending on how they are mentioned in feedback. For example, a person might say “I’m a user on the premium plan. I have an issue with updating my credit card details.” The fact that they are on a premium plan is supplementary information. We can gather it from customer records. So, really, we are just interested in what issues they currently have. This is especially common in support conversations.

AI models can help detect what type of feedback it is. Is this about an issue, is it a question, a suggestion / request or 'other', i.e. supplementary information?
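A crude sketch of feedback type detection, using keyword heuristics in place of a trained model. The cue words and the `feedback_type` function are invented for this example and would miss plenty of real-world phrasing.

```python
import re

ISSUE_CUES = r"\b(issue|problem|error|can't|cannot|doesn't work)\b"
SUGGESTION_CUES = r"\b(please add|would be nice|wish|should)\b"

def feedback_type(sentence):
    """Classify one sentence as question, issue, suggestion or other."""
    s = sentence.lower().strip()
    if s.endswith("?"):
        return "question"
    if re.search(ISSUE_CUES, s):
        return "issue"
    if re.search(SUGGESTION_CUES, s):
        return "suggestion"
    return "other"  # supplementary information

print(feedback_type("I'm a user on the premium plan."))                        # other
print(feedback_type("I have an issue with updating my credit card details."))  # issue
print(feedback_type("How do I export reports?"))                               # question
print(feedback_type("It would be nice to have more export options."))          # suggestion
```

Run on the example above, the premium plan sentence is set aside as supplementary, and only the credit card sentence is kept as an issue.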

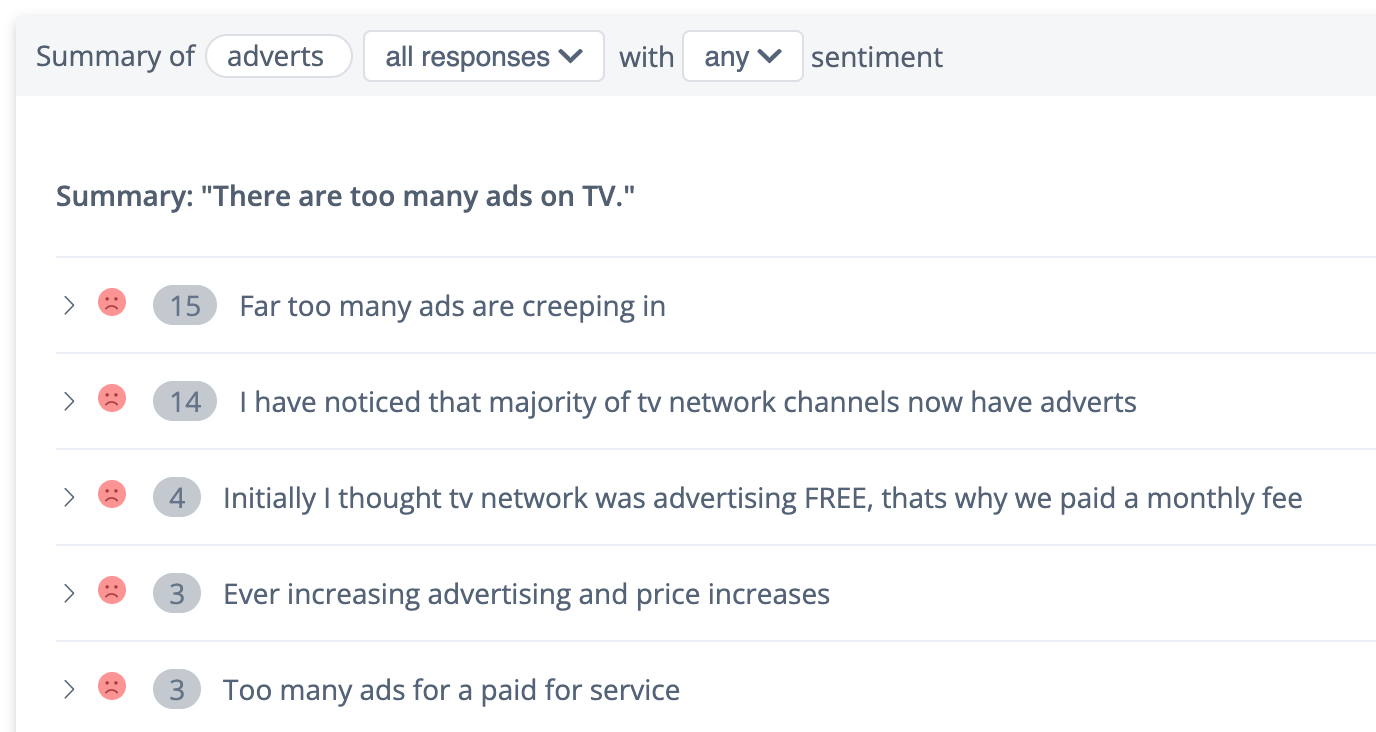

3. Summary of a theme

Let’s say we know that the theme is “credit card”, and we are looking at all feedback of type “issue”. There might still be too much feedback to read to figure out the best action. What kind of credit card issues are most common? This is where AI comes in again. It can cluster feedback based on similarity. It can then present these clusters, so that you can see which ones are most common and dive deeper into those of interest.
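Here is a small sketch of clustering by similarity. It assumes word overlap (Jaccard similarity) as the similarity measure and a simple greedy grouping, where a real system would likely use richer text representations. The comments, the threshold and the function names are made up.

```python
def tokens(text):
    return set(text.lower().split())

def jaccard(a, b):
    """Shared words divided by all words across the two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def cluster(feedback, threshold=0.3):
    """Greedy clustering: join the first cluster whose first item is similar enough."""
    clusters = []
    for text in feedback:
        for group in clusters:
            if jaccard(tokens(text), tokens(group[0])) >= threshold:
                group.append(text)
                break
        else:
            clusters.append([text])  # nothing similar yet, start a new cluster
    return sorted(clusters, key=len, reverse=True)  # most common first

issues = [
    "credit card declined at checkout",
    "my credit card was declined",
    "cannot update credit card expiry date",
    "credit card declined again",
    "unable to update card expiry date",
]
for group in cluster(issues):
    print(len(group), "x", group[0])
# 3 x credit card declined at checkout
# 2 x cannot update credit card expiry date
```

Instead of reading five comments, you read two cluster headlines and see at a glance that declined cards are the bigger problem.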

Conclusion

When it comes to analyzing feedback, AI is your best friend. But most importantly, you are AI’s best friend, if it’s designed in a way to learn from your feedback.

Ideally, you should be able to navigate feedback like a map: zoom out to see the lay of the land, and zoom in on the parts you are most interested in. It should give you the best route towards customer happiness. And it should help you get your team on board by providing a clever AI colleague who is an expert in insights, just like my vacuum is an expert in cleaning!
