
How will GPT-4 change the work of insights professionals? Will it take over the insights and research landscape - or can we use it to our advantage?

For the past 20 years, I’ve studied how computers understand language. My PhD is in this area, and I founded a successful company that uses AI to understand customer feedback. Like many of my peers, I did not think AI research would achieve in my lifetime what it has achieved with GPT-4. GPT-3, released in 2020, was impressive, but its hallucinations made it unusable for the work of insights professionals.

OpenAI has shown that these hallucinations can be substantially reduced. I am now a firm believer that AI will change the work of researchers, analysts and other insights professionals, and this post focuses specifically on their needs.

In late November 2022, OpenAI released ChatGPT. Within about two months, it had an estimated 100 million monthly users worldwide.

Microsoft invested a reported $10B to integrate the technology into its suite of tools, and promptly launched new versions of Bing and Office 365. John Oliver dedicated a full episode to these developments in AI, full of humor and cautionary tales.

In March 2023, OpenAI launched GPT-4, a multimodal successor to the model behind ChatGPT. The technical report reveals little about how it works, but provides many examples of tasks in which GPT-4 succeeds. These include academic and professional exams, where it scores comparably to top human test takers.

Many experts expect a massive economic shift, one that will hit white-collar knowledge workers hardest. According to GPT-4 itself, the roles most likely to be affected include copywriters, customer service representatives, paralegals, proofreaders and market research analysts.

Many people disagree, citing AI’s lack of empathy and other shortcomings they believe matter in these roles. But history shows that those who are underprepared for major technological shifts tend to miss out. To be prepared for this shift, we have to understand exactly what this technology delivers and how to use it to our advantage.

Large Language Models, or LLMs, are AI technologies that help machines understand and generate human language. They build upon language models that have been around for a long time.

To understand text, these models map every word into a vector space. For example, a word-embedding model places the vectors for ‘king’ and ‘tsar’ close together, pointing in roughly the same direction, because the two words appear in similar contexts. ‘King’ and ‘queen’ are also related, and the difference between them roughly matches the difference between ‘man’ and ‘woman’, so king - man + woman lands near queen. To generate text, a language model is trained to predict the next word given the words before it; applied repeatedly, feeding each prediction back in as input, it can produce whole passages.
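To make the vector idea concrete, here is a minimal Python sketch with hand-made, hypothetical 4-dimensional vectors (real embedding models learn hundreds of dimensions from data). It measures direction with cosine similarity and solves the classic analogy man : king :: woman : ? with vector arithmetic. The words and numbers are invented for illustration, so only the mechanics carry over to real models.

```python
import math

# Hypothetical hand-made word vectors, for illustration only.
# Dimensions (by construction): royalty, male, female, food.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "tsar":  [0.85, 0.75, 0.15, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.8, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.8, 0.0],
    "apple": [0.0, 0.0, 0.0, 0.9],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means perpendicular."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    candidates = [w for w in EMBEDDINGS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(round(cosine(EMBEDDINGS["king"], EMBEDDINGS["tsar"]), 3))
print(round(cosine(EMBEDDINGS["king"], EMBEDDINGS["queen"]), 3))
print(analogy("man", "king", "woman"))  # queen
```

In this toy space ‘king’ and ‘tsar’ are nearly parallel, ‘king’ and ‘queen’ are only moderately similar, and the arithmetic lands on ‘queen’.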

Large language models (LLMs) go a step further. They are trained on hundreds of gigabytes of text or more and have billions of learned parameters (GPT-3, for example, has 175 billion). LLMs use a deep neural network with many layers, known as a transformer, to learn the patterns and structures of language. Chat-oriented LLMs such as ChatGPT are additionally trained using reinforcement learning from human feedback (RLHF): human labelers rank the model’s outputs, and the model is tuned to favor the responses they prefer. This helps it learn to give accurate and helpful responses while avoiding harmful ones.
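Even at this scale, GPT-style models still generate text by predicting the next word, appending it and repeating. The sketch below shows that loop with a tiny bigram model (counts of which word follows which) standing in for the neural network. It is a toy under strong assumptions: real LLMs work on tokens rather than words, use billions of learned parameters instead of counts, and usually sample rather than always picking the single most likely word. The training sentences are made up.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows, start, max_words=10):
    """Greedy autoregressive generation: pick the most likely next word,
    append it, and feed it back in to predict the word after that."""
    words = [start]
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # no known continuation, so stop
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Made-up training text, for illustration only.
corpus = [
    "customers love the new dashboard",
    "customers love the mobile app",
    "the new dashboard is slow",
]
model = train_bigram(corpus)
print(generate(model, "customers"))  # customers love the new dashboard is slow
```

The result splices two training sentences into one the model never saw, because each step only looks at the previous word; larger models condition on far more context.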

OpenAI, a leading innovator in this space, launched GPT-3 as an API in 2020. You could pass in a question with some context and receive an answer. In November 2022 it launched ChatGPT, where instead of sending individual requests, you can have a conversation with the model. It’s currently free and easy to use via a simple interface.

GPT-4 launched shortly after as a multimodal model: it accepts images as well as text as input. Its training data reportedly includes not just plain text but also programming code, images and other data. OpenAI does not disclose the exact mix of training data, but says that a team of policy makers decides which data is fed into the model.

This approach creates what OpenAI calls “a reasoning engine”: a model that can interact with humans using natural language, pass complex tests, write text in various styles, explain memes and solve puzzles.

We don’t fully know how human understanding works. But we know that emotions and intuition form part of our understanding. An LLM has no access to these, so there are clear differences. LLMs also have severely restricted inputs, so they have knowledge but not experience. They can’t touch, taste or feel, and until GPT-4 they couldn’t “see” either.

That said, LLMs can closely simulate how we use language and how we reason. You can think of an LLM as a smart student (possibly an MBA) who has studied a vast amount of what has been written on the internet about every topic. It may not understand everything the way a human does, but it knows a lot and can analyze data, summarize and explain findings. Hundreds of millions of users already benefit from these capabilities.

In March 2023, Reid Hoffman published a book called Impromptu, which he co-authored with GPT-4! It’s 230 pages and free for anyone to read. In this book, Reid explores how AI technology will change the work of various professions. An early investor in OpenAI, Reid is incredibly optimistic about the opportunities GPT-4 will unlock for teachers, creatives, writers and even investors like himself. He also includes GPT-4’s views on why professionals must be cautious about what’s coming and how they can prepare.

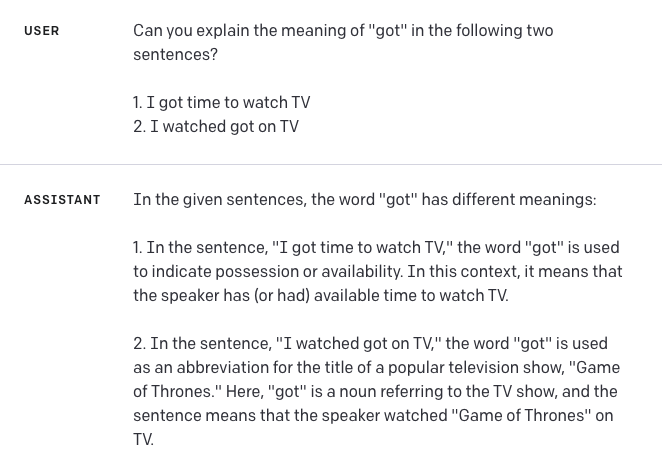

To follow Reid’s lead, here is my conversation with GPT-4 about how insights professionals will be affected.

First, the context. You can tell GPT-4 which role or persona to adopt when answering your questions. You can be creative here and choose an angry old man or someone like Agatha Christie. I chose the following:

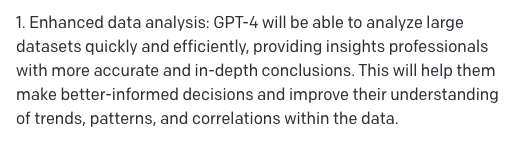
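Under the hood, chat APIs typically implement this kind of “mode” with a system message, and a conversation is simply the earlier turns resent with each request. The Python sketch below builds a request body in the shape of OpenAI’s Chat Completions API (a list of messages with `system`, `user` and `assistant` roles). The persona text, questions and default model name are placeholders, not the prompt in the screenshot above, and the code only builds the payload; actually sending it would need an API key and an HTTP POST to OpenAI’s endpoint.

```python
import json


def build_chat_request(persona, history, question, model="gpt-4"):
    """Build a Chat Completions-style request body.

    persona:  system message that sets the assistant's "mode"
    history:  list of (user_message, assistant_reply) pairs from earlier turns
    question: the new user message
    """
    messages = [{"role": "system", "content": persona}]
    # The API keeps no memory between calls, so earlier turns are resent.
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": question})
    return {"model": model, "messages": messages}


# Placeholder persona and turns, for illustration only.
request = build_chat_request(
    persona="You are an experienced market research analyst. Answer concisely.",
    history=[("Give 5 examples of how GPT-4 will change insights work.", "(earlier answer)")],
    question="You only gave 4 examples. What is the fifth?",
)
print(json.dumps(request, indent=2))
```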

I then asked it to provide five examples of how ChatGPT will change the work of insights professionals. Here are the answers:

This is interesting but also raises questions. At the moment, the largest GPT-4 variant has a context window of 32,000 tokens, so passing a huge dataset to the model in a single request is not possible. I asked GPT-4 about this, and it explained that you would need to preprocess a large dataset and pass only the relevant chunks to the model, one at a time or in batches. GPT-4 also pointed out that future models might be able to handle much larger context windows.
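A minimal Python sketch of that preprocessing step, assuming a rough heuristic of about four characters per token for English text (an approximation; a real tokenizer such as OpenAI’s tiktoken gives exact counts). It greedily packs feedback comments into batches that each stay under a token budget, which you would set well below the model’s context window to leave room for your instructions and the model’s answer. The function names and the tiny budget are illustrative.

```python
def estimate_tokens(text):
    """Rough token count: about 4 characters per token for English (an approximation)."""
    return max(1, len(text) // 4)


def batch_by_token_budget(comments, budget):
    """Greedily pack comments, in order, into batches whose estimated size stays within budget."""
    batches, current, used = [], [], 0
    for comment in comments:
        cost = estimate_tokens(comment)
        if cost > budget:
            raise ValueError("A single comment exceeds the budget; split it first.")
        if used + cost > budget:
            # The current batch is full: close it and start a new one.
            batches.append(current)
            current, used = [], 0
        current.append(comment)
        used += cost
    if current:
        batches.append(current)
    return batches


comments = ["The new UI is confusing."] * 10  # 24 characters, so ~6 tokens each
batches = batch_by_token_budget(comments, budget=20)
print([len(b) for b in batches])  # [3, 3, 3, 1]
```

In practice the budget would be in the thousands of tokens; 20 is only small enough to show the split.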

Beyond GPT-4, there are many competitors that may handle pre-training and fine-tuning differently. For example, Google has released PaLM, and Cohere is another company competing with OpenAI. You can also run an LLM within your own infrastructure: through its partnership with OpenAI, Microsoft Azure makes OpenAI’s models available for just that.

This is exactly our area of interest at Thematic. Analyzing text data, especially customer feedback at scale, has long been a difficult problem. For years, researchers avoided sending open-ended survey questions to customers because the answers are hard to analyze!

Analyzing social media, which is full of junk, is really difficult too. It starts with deciphering brand mentions. Researchers at companies like Genesys, Cruise, Apple and even Tesla have been struggling with this for years. Well, this is where GPT-4 excels! Check out the following example:

Often insights about an entity or a theme are spread across multiple sentences. This is another tricky NLP problem that GPT-4 aces:

These capabilities are foundational to all text analysis. For years, as a practitioner in this field, I have looked for workarounds because these problems weren’t solved. I’m incredibly excited about what’s in store given these advances, and can’t wait to share them once they’re implemented in Thematic.
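To see why brand mentions in particular needed workarounds, here is a deliberately naive keyword matcher in Python: a hypothetical baseline, not how Thematic or GPT-4 works. It flags any post containing a brand name as a whole word, so it cannot tell Apple the company from an apple pie, or Cruise the self-driving car company from a holiday cruise. The brand list and posts are made up.

```python
import re

# Hypothetical brand list, for illustration only.
BRANDS = ["Apple", "Cruise", "Tesla"]


def naive_brand_mentions(post):
    """Return every brand that appears as a whole word, ignoring case and context."""
    return [
        brand for brand in BRANDS
        if re.search(rf"\b{re.escape(brand)}\b", post, flags=re.IGNORECASE)
    ]


posts = [
    "My Apple Watch stopped syncing after the update.",  # real brand mention
    "Baked an apple pie for the family this weekend.",   # fruit, not the company
    "Booked a cruise to Alaska, can't wait!",            # holiday, not the company
]
for post in posts:
    print(naive_brand_mentions(post), "<-", post)
```

Two of the three flags are false positives. Resolving them requires reading the surrounding context, which is exactly where an LLM helps.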

Reports typically answer common questions stakeholders ask. For example, ‘What are the top positives and top negatives users shared in the past month about our product? What are the most common feature requests?’ For small datasets that fit GPT-4’s current context window, researchers can now generate this in minutes. For large datasets, we are automating this in Thematic.
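For datasets that exceed the context window, one common pattern, often called map-reduce summarization, is to summarize batches of comments separately and then summarize those partial summaries. This Python sketch shows the general pattern, not how Thematic implements it. The `summarize` argument is a hypothetical stand-in for a function that sends a prompt (for example, asking for top positives, top negatives and feature requests) to an LLM; `fake_summarize` exists only so the example runs offline.

```python
from typing import Callable, List


def map_reduce_summary(comments: List[str], summarize: Callable[[str], str], batch_size: int = 50) -> str:
    """Summarize batches of comments ("map"), then summarize the partial
    summaries ("reduce"), repeating until a single summary remains."""
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each round shrinks the input")
    if not comments:
        return ""
    partials = [
        summarize("\n".join(comments[start:start + batch_size]))
        for start in range(0, len(comments), batch_size)
    ]
    if len(partials) == 1:
        return partials[0]
    return map_reduce_summary(partials, summarize, batch_size)


def fake_summarize(text: str) -> str:
    """Offline stand-in for an LLM call: only reports how many lines it received."""
    return f"summary of {len(text.splitlines())} lines"


comments = [f"comment {i}" for i in range(120)]
print(map_reduce_summary(comments, fake_summarize))  # summary of 3 lines
```

In a real pipeline, the reduce step would usually get its own prompt (for example, “merge these partial summaries”), and batch sizes would come from a token budget rather than a fixed count.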

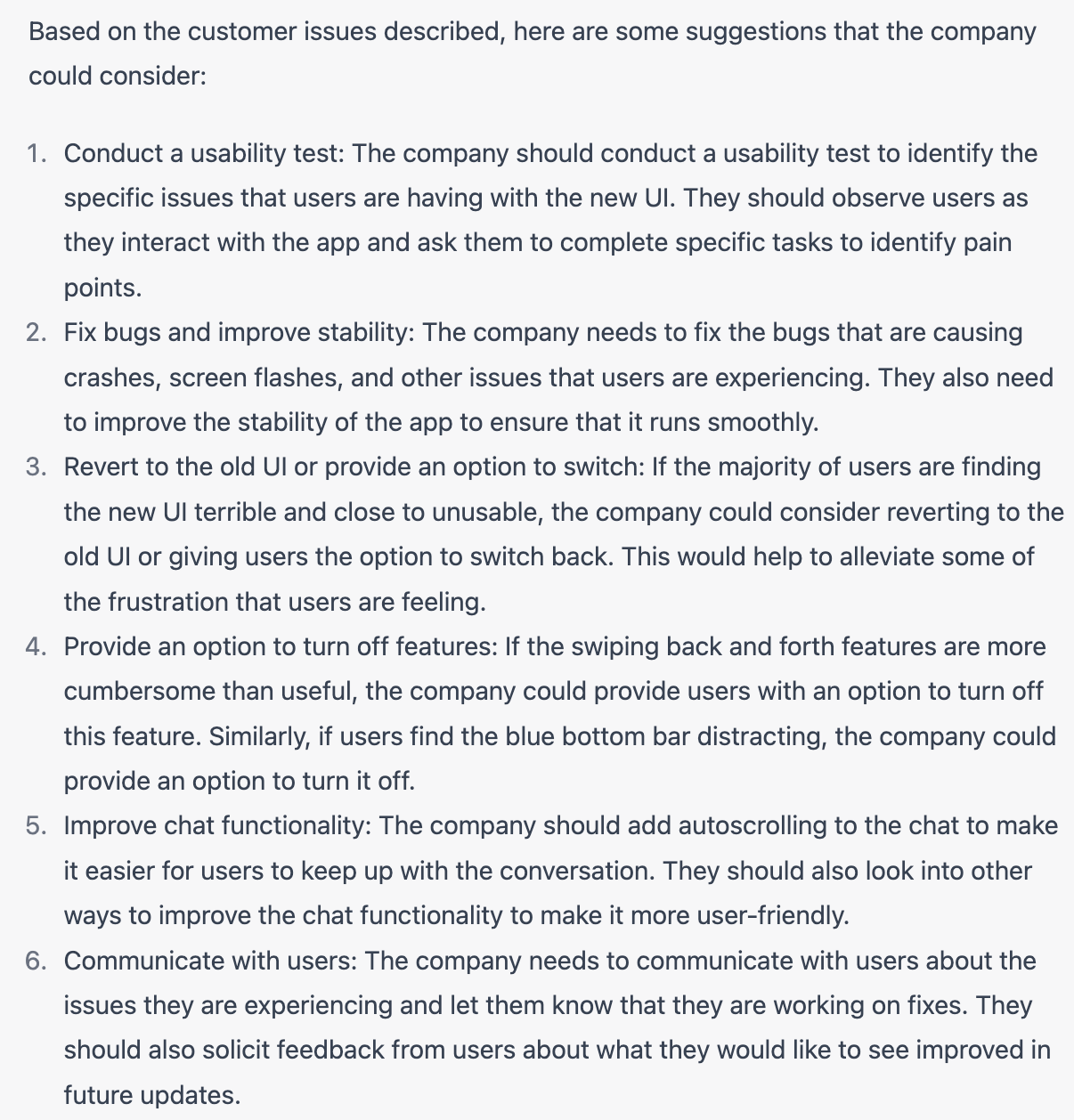

It’s interesting that GPT-4 says insights professionals will be able to spend more time crafting recommendations. For one dataset, Thematic picked up issues with a new UI that customers weren't happy about. I asked GPT-4 to suggest how to fix them, and here is what it said:

This is incredibly impressive!

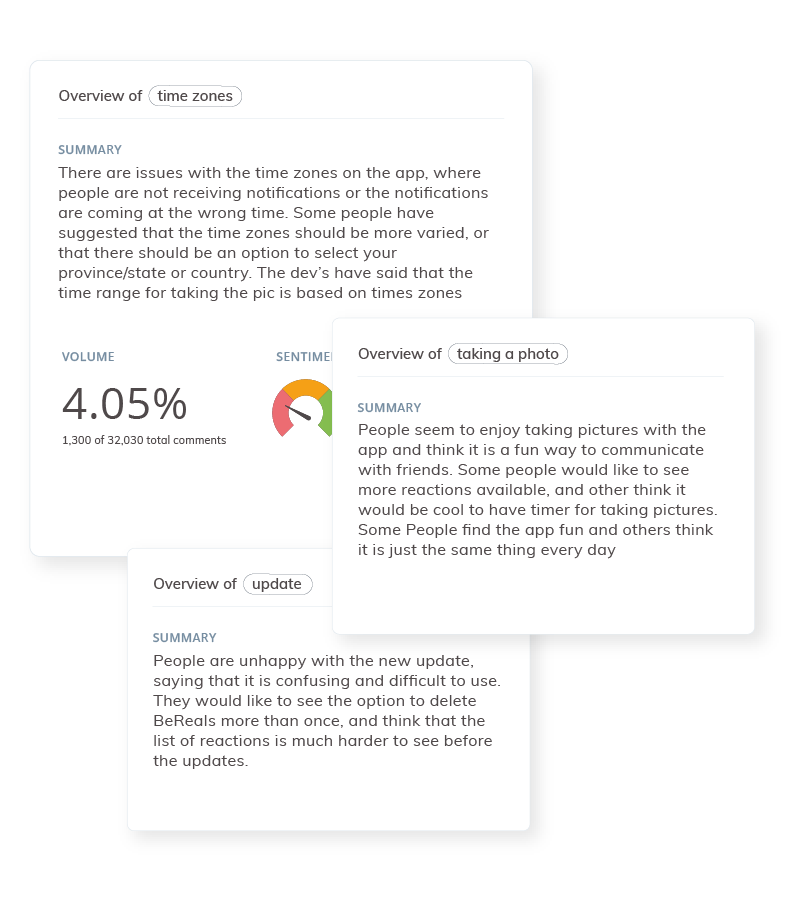

This is really powerful as well. We have launched this as a feature in Thematic, and the feedback from our customers has been overwhelmingly positive. Here’s a quick snapshot of the summaries explaining common themes in one company’s feedback:

You can read more details about it in our product update.

Funnily enough, I asked GPT-4 for 5 examples of how it will change the work of insights professionals but it provided only 4. So I complained!

It’s uncanny to have this kind of conversation, and feels like I have an insights analyst on staff myself!

But speaking of personalized insights for specific stakeholders, one of our customers asked if our solution could answer the following question: “We launched a new tool X, how was it received and what are the top 3 feature requests for this tool?”

Because Thematic already knows which feedback is about “tool X” rather than something else, and which feedback contains requests, we were able to send just that data to GPT-4 to help summarize it. Here's what it responded with:

Tool X: Top Three Feature Requests and General Sentiment

The X tool has received a variety of feature requests from users. The top three feature requests are as follows:

1. Allocate X replies to individual senders: Users would like the ability to allocate X replies to the individual sender rather than only having the option to allocate to a team or folder. This would help streamline the process and reduce the need for manual monitoring and allocation.
2. Disable specific X: Users have requested the ability to disable X at the [redacted] level. This would allow practices to control X usage and ensure that only [redacted] relevant X are used.
3. Add Z feature: Users have expressed the need to collect [redacted]. This is particularly important for [redacted] users. Adding feature Z would make it more convenient for users to enter this information.

For privacy reasons we redacted the name of the tool and specific things that people mentioned about it. But you get the idea.
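A Python sketch of the selection step described above: keep only feedback tagged with the theme of interest, mark which comments are requests, and wrap them in a prompt. The record fields (`text`, `themes`, `is_request`), the sample comments and the prompt wording are all hypothetical; in practice the tags would come from a text analytics tool, and the resulting prompt would be sent to an LLM.

```python
from typing import Dict, List

# Hypothetical tagged feedback, for illustration only.
feedback: List[Dict] = [
    {"text": "Tool X saves me a lot of time.", "themes": ["tool X"], "is_request": False},
    {"text": "Please let me assign replies to a single sender.", "themes": ["tool X"], "is_request": True},
    {"text": "The billing page is confusing.", "themes": ["billing"], "is_request": False},
]


def build_theme_prompt(feedback: List[Dict], theme: str, top_n: int = 3) -> str:
    """Keep only feedback tagged with `theme`, mark requests, and wrap it in a prompt."""
    lines = [
        ("[request] " if item["is_request"] else "") + item["text"]
        for item in feedback
        if theme in item["themes"]
    ]
    if not lines:
        raise ValueError(f"No feedback tagged with {theme!r}")
    return (
        f"Customer comments about {theme} follow, one per line. "
        f"Comments marked [request] ask for changes.\n"
        f"Summarize how {theme} was received and list the top {top_n} feature requests.\n\n"
        + "\n".join(lines)
    )


print(build_theme_prompt(feedback, "tool X"))
```

Filtering before prompting keeps the request small and keeps unrelated feedback (here, the billing comment) from diluting the summary.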

Finally, I asked GPT-4 if there are any other ways in which the work of insights professionals will be affected:

For the past few years, we’ve all been learning so many new skills, constantly adapting to new technologies and new ways of doing things. This will only escalate with the advances of AI.

Thankfully, LLMs can help by providing advice. I asked GPT-4 for help with this, but because it usually answers in rather wooden English, I had to ask it to summarize its answer in simpler terms:

Interestingly, it recommends learning programming languages like Python and R! It can actually help with this too:

I know how to program in Python and spotted that the answer was incomplete. So I asked what the query should be. It apologized for the incomplete answer and provided the query information:

As you can see, you can get quite technical with GPT-4, or you can keep it high-level.

There is no doubt that AI will change the role of insights professionals. But it will also change the role of insights and their impact on businesses. Insights will become much more accessible to not just analysts, but also everyone who needs them: marketers, product builders, managers, executives.

Right now, when someone in marketing needs to understand the drivers of satisfaction, they ask the insights department to run a dedicated survey or focus group. With the latest advances, they’ll be able to ask the AI directly and get answers within minutes, drawn from all the data made available to the model.

Democratizing insights means making them accessible to everyone in the company in the right way. But just because people can interact with data directly does not mean they will. Some will continue to request reports and ask ad hoc questions, which insights professionals will be able to deliver faster and with less grunt work.

The more insights companies consume, the more data-driven they’ll become. The importance of insights will keep increasing and so will the importance of insights professionals.

I hope this article encourages you to explore all the possibilities AI can unlock for those who work in research. We should all invest time in learning how to use this technology.

The good news is that you don’t have to have a PhD in AI to use it. In fact, having a PhD in this area is a bit of a disadvantage. I keep underestimating AI’s abilities, because of all the things I had to teach the AI algorithms I’ve developed over the years.

While it might seem scary that AI can do so many things as well as a human, and some of them even better, it’s still great news! It continues to automate the repetitive, non-creative tasks most of us don’t like doing.

Your understanding of the business, the context in which the research is conducted and the output that will convince stakeholders will be critical. AI is not here to take your job, but to give you back the time to work on interesting tasks. It’s here to give you superpowers!

