Generative AI and Feedback Analysis: security and data privacy concerns

How can you use generative AI safely? In this post, Alyona covers the security concerns around generative AI and explains how to lower the risks.

You might still remember the AI Winter. Back then, people’s concerns were about whether AI could deliver on its promises. That has since shifted: most people, including AI experts, are surprised at just how much AI can deliver technologically. We know AI works, and we know that it’s the future. But how do we do it right, and how do we get started?

One of the biggest questions we hear from companies is how to use AI safely. Samsung has banned the use of ChatGPT after a major data leak, and other companies are restricting what AI can be used for. There is a lack of clarity around where the data is sent, how it is used and stored, and what other security risks people need to be aware of.

There are other valid concerns when using AI, such as bias and ethics. In this article, we’ll focus on security because this is the more common concern of our customers: analysts and researchers who work with customer feedback.

Generative AI - 101

Knowledge is power! Let’s break down a few common terms related to Generative AI:

- OpenAI - A pioneering company in this space

- GPT - A large AI model created by OpenAI. It was trained on large volumes of data to interpret and generate text. The latest model, GPT-4, can also interpret images, and it is available to developers via OpenAI’s own servers and through other cloud providers.

- LLM - A large language model, an AI model designed for analyzing and generating human-like text. GPT is often referred to as an LLM.

- ChatGPT - An interface that OpenAI released that lets anyone ‘chat’ with GPT.

- Prompts - Relatively short snippets of text sent to an LLM such as GPT to make it perform a task. Prompts are most commonly questions, but they can also include a data sample, either as supplementary information (context) or as material to be re-used in the output.
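To make the difference between a bare question and a question with context concrete, here is a minimal sketch of how a prompt might be assembled. The `build_prompt` function and its template wording are illustrative assumptions, not a format required by any particular model.

```python
from typing import Optional


def build_prompt(question: str, context: Optional[str] = None) -> str:
    """Assemble a plain-text prompt from a question and optional context.

    The template is a hypothetical example; LLMs accept free-form text, so any
    clear separation between the data and the question would do.
    """
    if context is None:
        # A prompt can be just a question...
        return question
    # ...or a question plus a data sample for the model to analyze.
    return (
        "Use the following data to answer the question.\n\n"
        f"Data:\n{context}\n\n"
        f"Question: {question}"
    )


# Question only: none of your own data is included.
print(build_prompt("List three ways to ask customers about their recent experience."))

# Question plus context: the data sample becomes part of what is sent.
print(build_prompt(
    "What is the customer's main complaint?",
    context="Customer: The app logs me out every time I switch screens.",
))
```

The second call shows why prompts matter for privacy: whatever is placed in the context travels to the model along with the question.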

Tasks that large Generative AI models can perform:

- Generation - Given a question, an AI model generates an answer by predicting the most likely sequence of words. Examples: suggesting titles, writing blog posts, generating code, rap songs in the style of Eminem, or playing Dungeons and Dragons.

- Data transformation (Summarization / Reasoning) - Given a data sample, an AI model generates an answer by analyzing this data first. It can also transform the data into something else. Examples: cleaning data, translating code into a different programming language, summarizing a support chat.

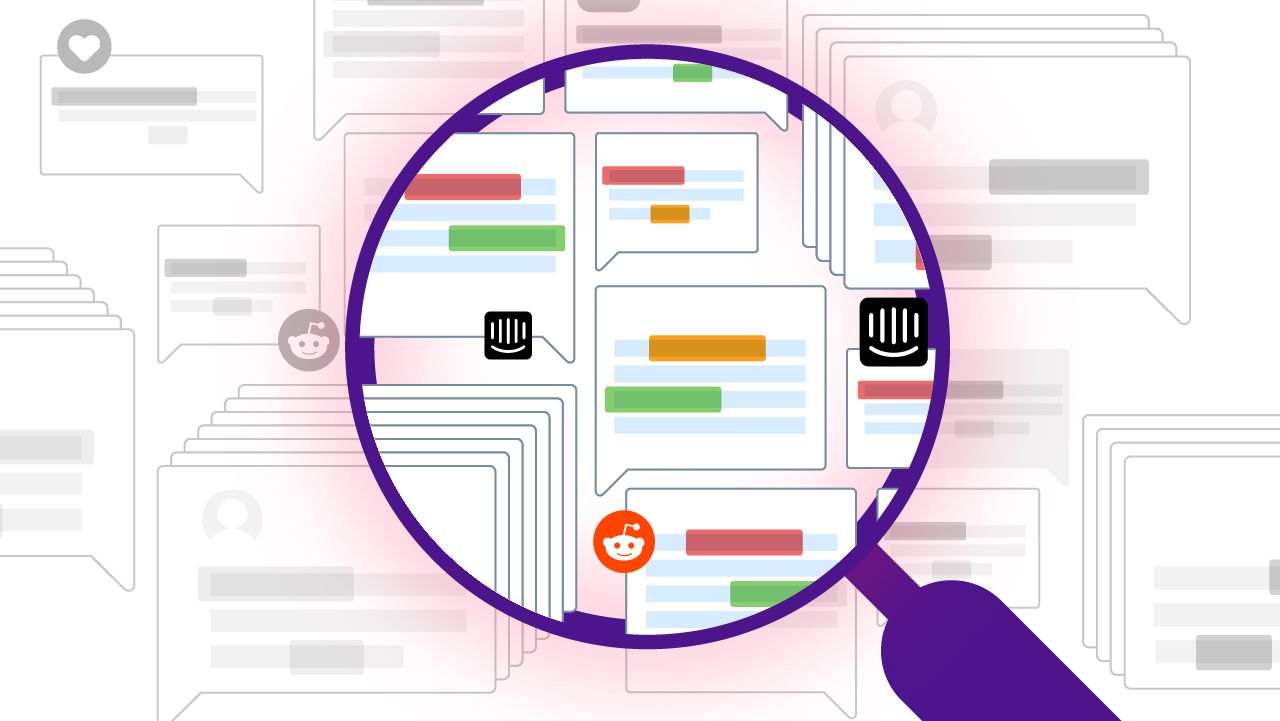

- Agency - A chain of LLMs that decide which direction to take next. Used for completing complex tasks. Examples: the Wolfram Alpha app on ChatGPT, which uses introspection to make sure it understands the context correctly, or a use case where an LLM not only generates computer code but also executes it.
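The "agency" idea can be sketched as a loop in which the model’s output decides the next step. In the minimal sketch below, `fake_llm` is a hypothetical stand-in for a real model call and the two tools are toy examples. A real agent would call an actual LLM and parse its free-text replies far more defensively.

```python
from typing import Callable, Dict, List


# Hypothetical tools the "agent" may choose between.
def calculator(arg: str) -> str:
    # Toy example: only supports "a+b" with integers.
    a, b = arg.split("+")
    return str(int(a) + int(b))


def echo(arg: str) -> str:
    return arg


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "echo": echo}


def fake_llm(task: str, history: List[str]) -> str:
    """Stand-in for a model call: returns 'tool:argument' or 'FINISH:answer'."""
    if not history:
        return "calculator:2+3"
    return f"FINISH:{history[-1]}"


def run_agent(task: str, max_steps: int = 5) -> str:
    history: List[str] = []
    for _ in range(max_steps):
        decision = fake_llm(task, history)   # the "model" picks the next step
        action, _, arg = decision.partition(":")
        if action == "FINISH":
            return arg
        result = TOOLS[action](arg)          # execute the chosen tool
        history.append(result)               # feed the result back into the loop
    return "stopped: step limit reached"


print(run_agent("What is 2 + 3?"))  # prints 5
```

The step limit is a deliberate safeguard: because the model chooses the path, the loop needs an explicit bound rather than trusting the model to finish.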

Generative AI models usually run on servers in the cloud, although some models can now be deployed to private clouds or even run on local machines. OpenAI offers its GPT models via its own servers, which is also where ChatGPT runs. Microsoft Azure offers the same OpenAI GPT models on its own servers, which are already widely used by enterprises.

Concerns about using Generative AI in work settings

Looking over our breakdown of common terms and AI model capabilities, you can already imagine that some tasks are riskier than others.

For example, let’s say you want ChatGPT to help you gather feedback from customers. If you ask it simple questions like “What are five ways to get high-quality feedback post call center interactions?” or “List three different ways to ask customers about their recent experience, as well as pros and cons of these approaches”, you get great ideas and fast research answers with very little risk of exposing any data.

But if you paste a full customer chat thread into ChatGPT to understand the intent of that conversation, things become risky. You might be sharing personal information, such as an address or credit card number mentioned in the thread. In effect, you are exposing customer data to a third party without the customer’s consent.
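If chat text does have to go to an external model, one common mitigation is to redact obvious PII first. The sketch below uses simple regular expressions for email addresses, card-like digit runs and phone numbers. These patterns are illustrative assumptions and will miss plenty of real-world PII, such as names and street addresses, so treat this as a starting point rather than a safeguard.

```python
import re

# Illustrative patterns only; real PII detection needs far more than regexes.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    # 13 to 16 digits, optionally separated by single spaces or dashes (card-like).
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,15}\d\b")),
    ("PHONE", re.compile(r"\+?\d[\d ()-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


chat = ("Customer: My card 4111 1111 1111 1111 was charged twice. "
        "Email me at jane.doe@example.com or call +1 555 010 0199.")
print(redact(chat))
# Customer: My card [CARD] was charged twice. Email me at [EMAIL] or call [PHONE].
```

The pattern order matters: card numbers are replaced before the looser phone pattern runs, so a long card number is not mislabeled as a phone number.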

There are many use cases in between, with varying levels of risk. For example, if an algorithm sends the same customer chat thread to an LLM hosted on Microsoft's highly secure cloud service, Azure, AND the company or team that owns that algorithm is security compliant, the risk is significantly smaller.

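There are three key variables, or risk levers, to consider when working with vendors offering Generative AI:

- Prompt - What data is included in the prompts sent to the LLM, and whether it contains personal information

- Compliance - Whether the vendor follows recognized security and privacy standards, such as SOC 2 and GDPR

- LLM Hosting - Where the LLM runs, and whether the team hosting it is compliant and can guarantee the server location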

As you can see, there are grey areas here that require a nuanced understanding. Talk to your data security team to de-risk your use of LLMs.
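One way to make the conversation with your security team concrete is to record the three risk levers (prompt, compliance, and LLM hosting) for each tool you evaluate. The sketch below is a hypothetical checklist. The `LLMSetup` fields and the `open_concerns` helper are illustrative names, and reducing each lever to a yes/no flag deliberately simplifies what are really grey areas.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LLMSetup:
    # Prompt: is raw, unfiltered customer text (possibly with PII) sent to the LLM?
    sends_raw_customer_text: bool
    # Compliance: does the vendor meet standards such as SOC 2 and GDPR?
    vendor_compliant: bool
    # LLM hosting: is the model hosted by a compliant provider in an approved region?
    hosting_compliant: bool


def open_concerns(setup: LLMSetup) -> List[str]:
    """Return the risk levers that still need discussion with your security team."""
    concerns = []
    if setup.sends_raw_customer_text:
        concerns.append("prompt")
    if not setup.vendor_compliant:
        concerns.append("compliance")
    if not setup.hosting_compliant:
        concerns.append("hosting")
    return concerns


# No lever addressed: all three need attention.
print(open_concerns(LLMSetup(True, False, False)))  # ['prompt', 'compliance', 'hosting']
# Compliant vendor and host, but full chat threads still go into the prompt.
print(open_concerns(LLMSetup(True, True, True)))    # ['prompt']
```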

How we approach LLMs at Thematic

As an AI expert myself, and as the CEO of Thematic, I instantly recognized the potential of this technology to speed up data analysis, create reports, and answer questions about what customers need and want.

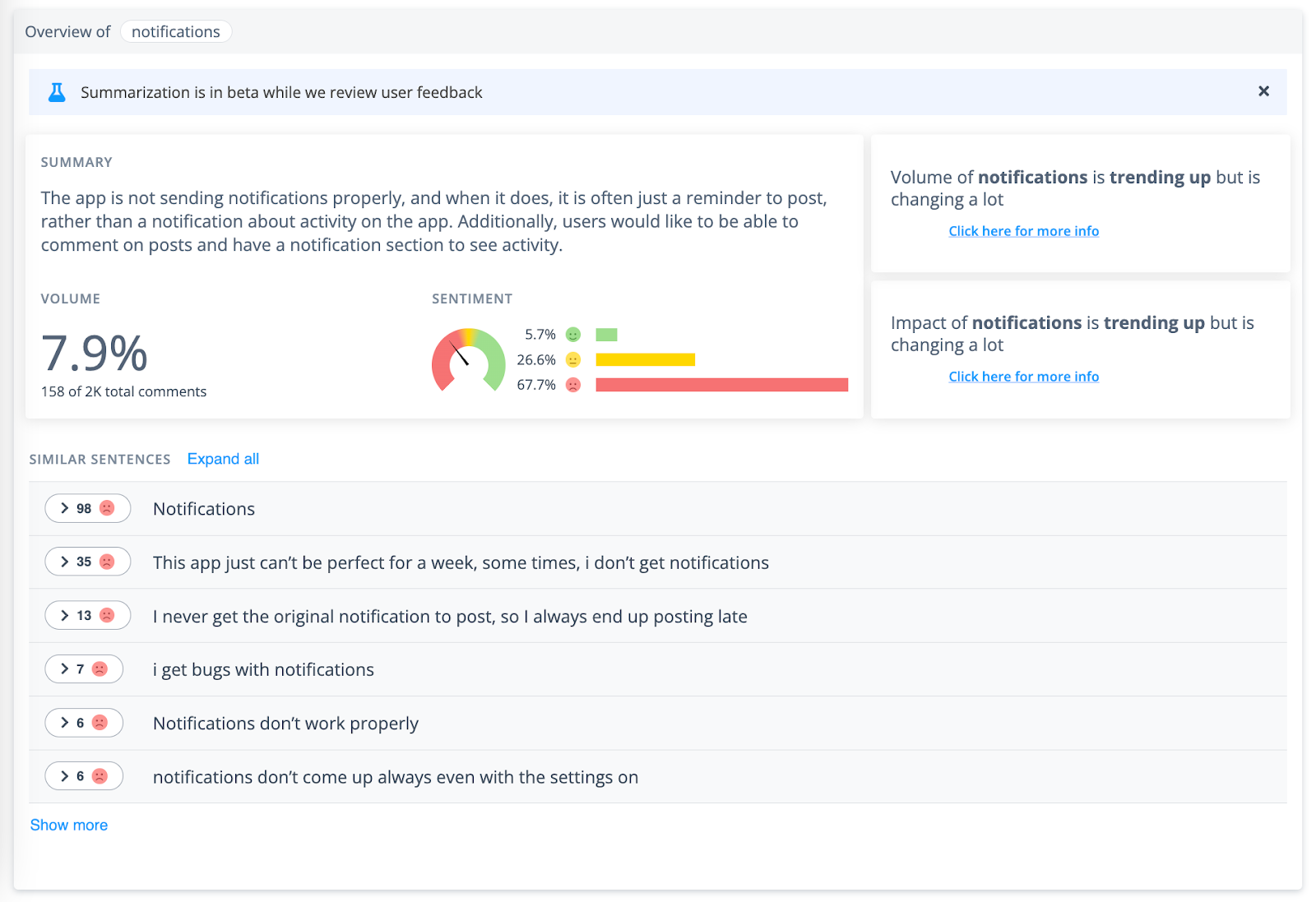

We already use Large Language Models extensively in both customer-facing and internal parts of our product. Our first customer-facing feature using LLMs, Theme Summarizer, has been really well received; as one user put it, “It’s gold, it saves me huge amounts of time.” That said, data privacy is top of mind for our team and our customers.

Here’s how we address each risk lever:

Prompt

Thematic has a proprietary AI that detects themes, sentiment and categories in feedback. Given a customer chat, our algorithms know which sentences represent actual feedback from the customer and which are supplementary information, such as an address or other personally identifiable information (PII).

This gives us a huge advantage over providers that lack this capability. They have to send all customer feedback to an LLM to analyze it, whereas we carefully select only relevant, high-quality data to include in the prompt. As a result, we greatly minimize the risk of data leaks.
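Thematic’s model is proprietary, so the following is only a toy illustration of the general idea: split a chat into sentences, keep the ones a classifier marks as feedback, and build the prompt from those alone. The `looks_like_feedback` keyword heuristic is a hypothetical stand-in for a real trained model.

```python
import re
from typing import List

# Hypothetical stand-in for a trained classifier: a naive keyword check.
FEEDBACK_CUES = ("love", "hate", "slow", "broken", "confusing", "wish", "great", "crash")


def looks_like_feedback(sentence: str) -> bool:
    lowered = sentence.lower()
    return any(cue in lowered for cue in FEEDBACK_CUES)


def select_feedback(chat: str) -> List[str]:
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", chat.strip())
    return [s for s in sentences if looks_like_feedback(s)]


def build_summary_prompt(chat: str) -> str:
    # Only the selected sentences go into the prompt; the rest never leaves.
    selected = select_feedback(chat)
    bullets = "\n".join(f"- {s}" for s in selected)
    return f"Summarize the main themes in this customer feedback:\n{bullets}"


chat = ("Hi, I live at 12 Example Street. The checkout page is really slow. "
        "My order number is 48213. I wish I could save my card details.")
print(build_summary_prompt(chat))
```

Here the address and order number are dropped before the prompt is built, so they never reach the LLM. The quality of this approach depends entirely on the classifier, which is why a keyword list is only a placeholder.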

Compliance

We’ve worked with large enterprises for years. PwC did an extensive review of our data privacy and security practices, and concluded that we are safe to work with.

We undergo an annual security review in which we have to prove that we have the right processes to keep our customers’ data secure. We are SOC 2 Type II certified and GDPR compliant, which ensures that we follow enterprise-standard security protocols to protect customer data.

LLM Hosting

We currently use LLMs hosted on Microsoft Azure and are exploring alternatives. It's critical that these servers are run by teams that are SOC 2 certified and GDPR compliant. We also need to be able to specify the server’s location, so that companies that must keep their data within a particular region don’t risk losing that compliance.
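A data-residency requirement like this can be enforced in code as well as in contracts. The sketch below is a hypothetical guard: the `Deployment` record, the per-customer region policy and `check_residency` are assumptions for illustration, not part of any Azure SDK. On Azure, a resource’s region is chosen when it is created, so a check like this would typically compare against configuration you maintain yourself.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Deployment:
    name: str    # hypothetical deployment name
    region: str  # region the model endpoint was provisioned in


# Hypothetical per-customer policy: regions where their data may be processed.
ALLOWED_REGIONS: Dict[str, Set[str]] = {
    "eu-customer": {"westeurope", "swedencentral"},
    "au-customer": {"australiaeast"},
}


class ResidencyError(Exception):
    pass


def check_residency(customer: str, deployment: Deployment) -> None:
    """Raise before any data is sent if the deployment's region is not allowed."""
    allowed = ALLOWED_REGIONS.get(customer, set())  # unknown customer: nothing allowed
    if deployment.region not in allowed:
        raise ResidencyError(
            f"{customer} data may not be processed in {deployment.region}"
        )


eu_model = Deployment(name="summarizer-eu", region="westeurope")
check_residency("eu-customer", eu_model)      # passes silently
try:
    check_residency("au-customer", eu_model)  # wrong region for this customer
except ResidencyError as err:
    print(err)
```

The guard fails closed: a customer with no configured policy gets an empty allow-list, so data is never sent by default.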

Final thoughts

If you have played with ChatGPT or its many cousins, you will know that it’s not a “new shiny object” and it’s not “hype”. You know that it will soon transform the way we work. The best thing you can do is to ride the wave of what’s possible and use AI-powered tools to your advantage. But of course, beware of the pitfalls, especially if you work with customer data.

At Thematic, we’re always going to be on the cutting edge of tech, to bring our customers the best insights, as fast as possible - but never at the cost of compromising customer data or forgetting about data security.
