Why you should choose open-ended comments, and conversations, over rating scales.

This article is Part 1 of our three-part series on Open-Ended Questions. Part 2 explores "How You Should Use Open-Enders in Your Surveys" and Part 3 covers "How to Use Open‑Ended Feedback Across Your Organization".

Companies use CX surveys to figure out what’s working and what’s not. But are they really learning what matters most to customers?

Most of these surveys rely on ratings. Customers score experiences with a number. But that number often lacks context. Teams end up guessing what a 6 or 7 really means. Meanwhile, most customers don’t even bother responding. Only 7% respond. That means 93% of voices go unheard.

This wasn’t always the case. In the early 2010s, as email surveys scaled up, numbers were easier to track. Open-ended responses were simply too slow to process. But AI has changed that. Now we can analyze open-ended feedback just as quickly and with far more depth.

With the right tools, companies can hear the whole story. They can see the themes, urgency, and emotion behind each response. It’s faster, smarter, and finally focused on what really matters.

In a McKinsey survey, 93% of CX leaders said they rely on survey-based metrics such as Customer Satisfaction Score (CSAT) or Customer Effort Score (CES) to measure performance. Yet only 15% of those leaders said they were fully satisfied with how their companies measure CX. A mere 6% expressed confidence that their measurement systems support both strategic and tactical decision-making.

The shortcomings go deeper. CX leaders pointed to low response rates, slow data turnaround, ambiguity about what drives performance, and a lack of linkage between feedback and financial outcomes as critical flaws. These gaps highlight a system that struggles to capture real insight in real time.

McKinsey's findings show that today’s survey systems are often too limited, reactive, ambiguous, or unfocused to meet the demands of modern CX. The accompanying infographic illustrates this clearly. Each quadrant (Limited, Reactive, Ambiguous, and Unfocused) maps a core flaw CX leaders face when trying to capture and act on customer insights.

The graphic visualizes the statistical weaknesses and underscores the strategic disconnect companies face when relying on outdated measurement tools. That’s why many organizations are looking beyond rating scales and toward open-ended feedback as a more authentic and actionable alternative.

Surveys tend to be a comfort blanket. They give you something to hold onto, but they often miss the broader context and root causes behind customer feedback.

In practice, surveys often isolate one detail, like an interaction with an employee, without allowing customers to comment on the broader issue that caused their frustration. One example is a customer who gives a poor score after a call with an agent. The problem is a broken system rather than the person who helped them. The survey doesn't capture that context.

Ratings-based surveys were built for a time when customer experiences were simpler and expectations were lower. They’ve since become a default, but a dated one. Fixed-response formats are easy to analyze and scale, but they don’t match the complexity of modern CX.

First introduced in the 1930s, Likert scales let people indicate how strongly they agree or disagree with a statement. Their simplicity made them popular. Tools like NPS evolved from this model by pairing a rating with a comment box.

But the rating-based survey format has limits. Ratings reduce emotion to a number. Follow-up comment fields are often skipped or treated like an afterthought. Customers know these responses won’t lead to change, so they disengage.

That leads to three key issues:

Many surveys end up as box-ticking exercises: quick to send but rarely useful. Disengaged customers click through without thought or abandon the form altogether. The result is skewed metrics, lost context, and decisions made in the dark.

Traditional rating scales were developed to bring structure and perceived objectivity to feedback collection. But with the rise of AI and advanced analytics, their relevance is rapidly declining. With today’s AI and LLMs, companies can analyze open-ended comments and accurately predict how someone might have answered a rating scale, without ever needing to ask.

Studies show that transformer-based models can generate synthetic NPS and other scale-based metrics from open-ended responses. These models use NLP to estimate what score a customer might give based solely on their written or spoken comments. The resulting synthetic score is then validated against actual customer ratings.

Some of these models have achieved Pearson correlation coefficients of 0.85 or higher against actual customer ratings, a strong alignment between predicted and real scores. This suggests modern NLP isn't just approximating a number but capturing real nuances of sentiment and intent, using language alone.
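To make the validation step concrete, here is a minimal sketch of how a synthetic score could be checked against real ratings using the Pearson correlation coefficient. The two score lists are made-up illustrative data, not results from any study, and the `pearson` helper is a plain implementation of the standard formula rather than any particular vendor's method.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (unnormalized; the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)

# Hypothetical data: the 0-10 ratings customers actually gave ...
actual_scores = [9, 3, 7, 10, 2, 6, 8, 4]
# ... and the scores a model predicted from their comments alone.
synthetic_scores = [8, 4, 7, 9, 3, 5, 9, 5]

r = pearson(actual_scores, synthetic_scores)
print(f"Pearson r = {r:.2f}")
```

A value close to 1 means the synthetic scores rise and fall with the real ones. In practice you would run this on a held-out set of responses where customers gave both a rating and a comment.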

In some cases, this level of predictive power rivals or even exceeds the reliability of traditional rating scales. The technology does more than infer a number; it captures the underlying emotion and meaning in each comment.

That’s not just a technical milestone. It marks a shift in how we gather and interpret customer insight. With AI models now able to reliably extract sentiment and intent from open-ended feedback, the need for numeric-only responses fades. This development unlocks real benefits: organizations can simplify surveys, reduce friction, and capture richer context. It means fewer barriers for customers and more clarity for decision-makers.

When synthetic scores from open-ended feedback prove reliable and win trust across both data and business teams, the need for asking rating questions directly starts to fade. AI can now capture nuance, emotion, and context without forcing customers into a 0-to-10 box. That’s a major evolution in how we understand and act on feedback.

Customers want more than transactions; they want conversations that feel meaningful, supportive, and human. Rating scales, by design, offer limited space for that kind of depth.

According to a 2019 Trustpilot survey, 61% of consumers expect brands to publicly respond to their feedback. Customers want responsive and empathetic engagement over detached, promotional messaging.

A more recent report from Intercom said 86% of consumers are comfortable when brands communicate in a tone similar to how they talk with friends and family. And the latest Sprout Social report reinforces this: 64% of consumers want brands to connect with them on a more human level, not just try to sell to them.

Gen Z, in particular, places a premium on authenticity. They expect brands to be transparent, values-driven, and honest in their communication. They’re also quick to disengage from brands that come across as inauthentic or self-serving.

This preference for informal, relatable dialogue points to a major limitation of rating-based feedback. It’s not a conversation. It doesn’t respond, explore, or empathize. It captures a number but misses the moment. There is no connection.

Open-ended feedback works differently. It gives customers space to speak in their own words, share their priorities, and feel heard.

Brands often prompt customers to respond to narrow, predetermined topics or conversations customers may not even want to have. In contrast, open-ended questions invite a different kind of dialogue. They allow customers to say what's truly on their minds, whether that's their experience with an employee, a broader frustration, or even something unexpected. It's an open door, not a checklist.

When brands treat conversations as opportunities to connect on shared values and support customers emotionally, they build stronger, more trusted relationships. As Sprout Social found, 44% of consumers say they feel more connected to brands that engage in relevant conversations.

Open-ended questions don’t just gather data; they open the door to dialogue. They create room for reflection, emotion, and authenticity. In the right hands, they turn surveys into stories.

Asking open-ended questions rather than relying solely on scale-based prompts is a first step toward a more mature Voice of Customer (VoC) approach. It shifts the goal from measurement to meaning.

A Likert scale might tell you that customers are "somewhat dissatisfied" with your onboarding process. Useful, but limited. An open-ended response, by contrast, might reveal: "The rep was nice, but I had to watch three separate videos to understand the platform. It felt disjointed and overwhelming." One gives you a number. The other gives you a story, and the context behind the frustration.

That context matters. A number might help you track trends over time, but it won’t help you fix what’s broken. The open-ended response points directly to the experience that caused the dissatisfaction. For the business, that means actionable insight: simplify the onboarding content. For the customer, it means feeling understood instead of reduced to a rating.

Pairing a single open-ended question with a Likert scale can be a thoughtful starting point. For example, asking a customer to rate their experience and then following up with a prompt like "What’s the main reason for your score?" provides a familiar entry while encouraging more candid, useful feedback. However, it's important not to overdo it. Asking open-ended questions after every rating can exhaust respondents and lead to lower-quality responses.

True maturity in voice-of-customer research means moving beyond this as a one-off. It’s about treating open-ended responses not as afterthoughts, but as the core of how you understand and engage with your audience.

It's understandable why some organizations hesitate to rely on open-enders alone. Before automated analytics, this kind of data was time-consuming to process and analyze.

Back in the early 2000s, I used what I jokingly called “manual AI.” I’d copy every open-ended response into a spreadsheet, read through them line by line, and categorize each one myself. It was slow and tedious, but that process exposed the raw, unfiltered truths that rating scales just couldn’t surface. Those comments sparked real conversations and pointed to what actually needed fixing.

Today, AI tools can do the heavy lifting, analyzing open-ended responses at scale. But the purpose remains the same: to open a channel where customers can speak freely and be heard. The difference now? There's no pain or exhaustion standing in the way. There's simply no reason not to ask.

Let’s look at LinkedIn’s Sales Navigator team. Like many companies, they had tons of survey data (plenty of NPS scores), but they couldn’t always tell what was driving the changes. One of the most important features for their users was search. But feedback on it was all over the place. They knew it mattered, but they didn’t know why it was helping or hurting satisfaction.

So they turned to open-ended comments. And instead of trying to manually sort through mountains of feedback, they used Thematic’s solution to surface the patterns automatically. That shift let them:

With clearer insights, the product team could make smarter decisions, ultimately improving product satisfaction by 23%. They could zoom out for executive reporting or zoom in for deep-dive analysis, and the feedback connected directly to their closed-ended data.

As LinkedIn researcher Allison Schoer put it, “The use of frameworks provides consistency—and at the same time, also reinforces themes that we're seeing in our closed-ended data.”

It’s a solid example of what happens when open-ended feedback isn’t treated as noise or an afterthought. It becomes the bridge between what people say and what actually gets improved.

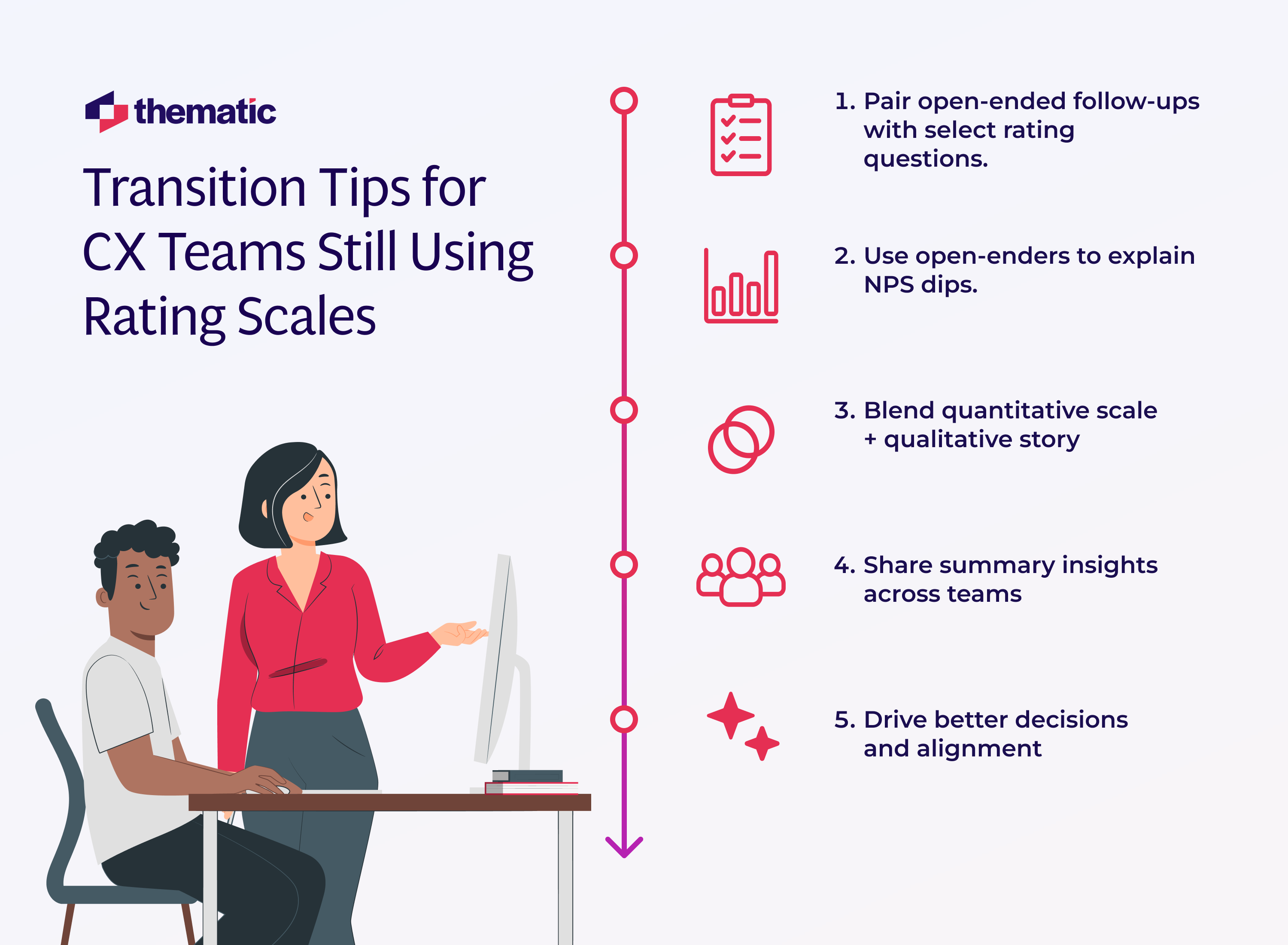

Still using rating scales and not sure how to move beyond them? Open-enders don’t need to replace your existing surveys overnight. They can complement them, helping you get more from the feedback you’re already collecting.

Take LinkedIn, for example. Their research team didn’t abandon closed-ended questions. Instead, they used open-ended feedback to validate and deepen the insights from their NPS scores. When they saw dips or shifts in the numbers, the qualitative feedback helped explain why. Thematic analysis made it easier to pull together a cohesive narrative from both types of data. That mix—quantitative scale + qualitative story—meant stronger alignment across teams and better decision-making.

In her webinar, LinkedIn researcher Allison Schoer explained that this combined view allows researchers to reinforce patterns they’re already seeing in closed-ended data while drawing out the details that only come from verbatims. She also highlighted that using Thematic made it easier to prepare and share summaries of those insights with stakeholders across the business, getting everyone aligned on customer priorities.

If you're starting small, the GLG guide on open-ended survey design recommends:

Moving to open-ended feedback doesn't just change what you ask; it changes how you work with the answers.

Text analytics makes it faster and easier to get actionable insights from unstructured data like reviews, emails, or survey comments. Using AI built to understand language, these tools can:

One of the most powerful outputs of text analytics is themes, or recurring patterns or topics that show up in feedback. Instead of wading through thousands of individual comments, you get to see the big picture. You understand what customers are actually talking about, whether it’s “wait times,” “checkout flow,” or “helpful support.”
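As a rough illustration of what "seeing the big picture" means, the sketch below tags comments with themes and counts how often each one appears. The keyword lists in `THEME_KEYWORDS` and the sample comments are hypothetical, and simple keyword matching is only a stand-in here: real text analytics tools discover themes with language models rather than hand-written rules.

```python
from collections import Counter

# Hypothetical keyword rules standing in for a real theme-discovery model.
THEME_KEYWORDS = {
    "wait times": ["wait", "queue", "on hold"],
    "checkout flow": ["checkout", "payment", "cart"],
    "helpful support": ["helpful", "friendly", "resolved"],
}

def tag_themes(comment):
    """Return the set of themes whose keywords appear in the comment."""
    text = comment.lower()
    return {
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    }

def theme_counts(comments):
    """Count how many comments mention each theme."""
    counts = Counter()
    for comment in comments:
        counts.update(tag_themes(comment))
    return counts

# Made-up sample comments for illustration.
comments = [
    "I was on hold for 40 minutes before anyone answered.",
    "Checkout kept rejecting my payment.",
    "The agent was friendly and resolved my issue quickly.",
    "Long wait, but support was helpful once I got through.",
]

counts = theme_counts(comments)
for theme, n in counts.most_common():
    print(f"{theme}: {n}")
```

Note that one comment can carry several themes, which is why the last comment counts toward both "wait times" and "helpful support".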

These themes do more than summarize; they give context to what customers expect and experience. You can feed them into driver analysis to see what’s actually impacting loyalty or churn, and use them to inform decisions across the organization.
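One very simple way to approximate driver analysis is to compare the average score of responses that mention a theme with the average of those that don't. The sketch below does exactly that on made-up data. The `theme_impact` function and the sample responses are assumptions for illustration, and a production analysis would typically use regression or similar methods to account for overlapping themes and sample size.

```python
def theme_impact(responses, theme):
    """Mean score of responses mentioning the theme minus the mean of the rest.

    Returns None when either group is empty, since no comparison is possible.
    """
    with_theme = [r["score"] for r in responses if theme in r["themes"]]
    without_theme = [r["score"] for r in responses if theme not in r["themes"]]
    if not with_theme or not without_theme:
        return None
    return sum(with_theme) / len(with_theme) - sum(without_theme) / len(without_theme)

# Hypothetical responses: a 0-10 score plus the themes found in the comment.
responses = [
    {"score": 2, "themes": {"wait times"}},
    {"score": 4, "themes": {"wait times", "checkout flow"}},
    {"score": 9, "themes": {"helpful support"}},
    {"score": 8, "themes": {"helpful support"}},
    {"score": 6, "themes": {"checkout flow"}},
    {"score": 7, "themes": set()},
]

themes = ["wait times", "checkout flow", "helpful support"]
impacts = {theme: theme_impact(responses, theme) for theme in themes}

# List themes from most negative to most positive association with score.
for theme, impact in sorted(impacts.items(), key=lambda item: item[1]):
    print(f"{theme}: {impact:+.2f}")
```

A strongly negative value flags a theme that tends to appear alongside low scores, which makes it a candidate for closer investigation rather than proof of cause.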

That’s where building capability comes in. CX teams need to help decision-makers understand how to interpret open-ended data. That means showing them not just what a theme is, but how it ties back to strategic goals. Once leaders are comfortable, you can expand the reach. You can bring in marketing, service, ops, or everyone who deals with unstructured data that needs to be acted upon.

As previously mentioned, only 7% of customers ever respond to surveys. By analyzing the feedback that already exists in reviews, emails, and other unstructured sources, text analytics helps give voice to the 93% of customers who don’t respond. It helps you move beyond disconnected ratings to stories that spark action. With the right tools and mindset, it becomes the backbone of a stronger, more responsive voice-of-customer program.

You’re already sitting on a wealth of open-ended feedback. You just need the right tools and a team ready to listen, and you can finally use that feedback to make real improvements faster, smarter, and with more confidence.

These insights don’t just fix problems; more importantly, they fuel innovation. Many teams use open-ended responses to guide product development, improve service flows, and refine policies. For instance, analysis of open-text feedback has helped companies identify unmet needs, validate feature ideas, and discover new use cases, all directly from customer narratives.

You’ve seen the data. Heard the experience. Followed the examples.

The bottom line is that open-ended feedback gives you more than a score. It gives you the story behind it. That story isn’t always pretty. It can be a rant, a compliment, or a cry for help. But with the right AI-powered tools, it turns into patterns, themes, and clarity.

So here’s the takeaway: Start small. Add just one additional open-ended question to one of your surveys. See what it reveals. Let that answer guide your next move. Once you hear the real voice of your customer, it’s hard to go back to numbers alone.

Curious to see what that looks like in practice? Request a demo of Thematic and discover how your open-ended responses can lead to smarter, faster decisions and better experiences.

Read more about open-enders in Part 2 of our series, "How You Should Use Open-Enders in Your Surveys", and Part 3, "How to Use Open‑Ended Feedback Across Your Organization".
