
Learn how to apply deductive thematic analysis—build a theory-first codebook, tag feedback at scale, and prove ROI inside Thematic.


When you're sifting through customer feedback with a strong hunch about what's driving dissatisfaction, deductive thematic analysis is your go-to approach.

It's a theory-first method of qualitative data analysis. You start with predetermined themes or a hypothesis, then look for patterns in the data.

By contrast, an inductive thematic analysis approach lets the data reveal the themes.

When does theory-first beat exploratory coding?

Say a product team suspects "response time" issues are hurting their support ratings. Using a deductive approach, they start with "response time" as a theme and tag all related comments.

Having a hypothesis saves hours. You zero in on known problem areas instead of wading aimlessly through every comment.

In thematic analysis, neither approach is "better" universally. A deductive framework excels when you have specific questions to answer or existing theories to validate.

(There's even an approach that leans on researcher insight; check out our reflexive thematic analysis article.)

The deductive approach focuses your analysis on a theory from the start, making it faster and more directed than purely exploratory methods. That's what we'll dive into today.

Three core ingredients make this theory-first method of qualitative data analysis successful: codebooks, theoretical framing, and reliability checks.

Deductive thematic analysis relies on an a-priori codebook, a list of themes or codes you decide on before analyzing the data.

It acts as your roadmap.

For example, if you're analyzing support tickets, you might start with categories like "Pricing," "Login Issues," and "Response Time" based on prior research or experience. Each theme in the codebook has a clear definition so everyone on the team understands what to tag.

In practice, you might document these definitions in a shared sheet or whiteboard to keep all analysts aligned.

It's like agreeing on the rules of a game before you start.
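
As a rough illustration, the codebook described above can be kept as a small data structure rather than a free-form sheet. This is a minimal sketch: the `Theme` class, the `validate_codebook` helper, and the definitions and example comments are all hypothetical, chosen only to mirror the support-ticket categories mentioned earlier.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Theme:
    """One entry in an a-priori codebook (illustrative structure)."""
    name: str
    definition: str
    examples: Tuple[str, ...] = ()


# Hypothetical codebook for support tickets; wording is made up for the sketch.
CODEBOOK: Dict[str, Theme] = {
    theme.name: theme
    for theme in (
        Theme("Pricing",
              "Comments about cost, billing, or perceived value.",
              ("Too expensive for what it does",)),
        Theme("Login Issues",
              "Problems signing in or staying signed in.",
              ("I keep getting logged out",)),
        Theme("Response Time",
              "How quickly support replies to or resolves a ticket.",
              ("Waited three days for an answer",)),
    )
}


def validate_codebook(codebook: Dict[str, Theme]) -> List[str]:
    """Return the names of themes that lack a definition or an example."""
    return [
        name for name, theme in codebook.items()
        if not theme.definition.strip() or not theme.examples
    ]


print(validate_codebook(CODEBOOK))  # an empty list means every theme is documented
```

Keeping definitions and examples next to each theme name makes it easy to check that nothing is left undefined before coders start tagging.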

Your themes aren't random buckets. They're grounded in an existing theory or framework.

Imagine you're examining customer loyalty feedback using a well-known model like NPS drivers. Your codebook might align with those drivers (product quality, price fairness, service efficiency) to test if the theory holds true in your dataset.

Theory gives your analysis context and purpose from the start.

Deductive thematic analysis emphasizes reliability and consistency.

If multiple people are coding the data, you need to measure how much they agree on applying the codebook. This is where reliability metrics like Cohen's κ (kappa) and Krippendorff's α (alpha) come in.

These statistical measures show how far coders agree beyond what chance alone would produce. Think of checking whether two people would give the same movie the same score. The closer the value is to 1, the more you can trust that your analysis is solid and not just one person's interpretation.

Why bother with these metrics? Because a theory-first approach strives for objectivity (you're testing a hypothesis), the evidence must be dependable.
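
To make the metric concrete, here is a small sketch of Cohen's κ for two coders who each assign exactly one theme per comment. It uses the standard formula κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance. The function name and the sample labels are illustrative assumptions, not part of any particular tool.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who each assign one label per item."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both coders must label the same non-empty sample.")
    n = len(labels_a)
    # Observed agreement: share of items where both coders chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each coder's label proportions, summed over labels.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical label for every item
    return (observed - expected) / (1 - expected)


# Made-up pilot labels for six comments.
coder_a = ["Pricing", "Pricing", "Login Issues",
           "Response Time", "Response Time", "Response Time"]
coder_b = ["Pricing", "Login Issues", "Login Issues",
           "Response Time", "Response Time", "Pricing"]

print(round(cohens_kappa(coder_a, coder_b), 2))  # 0.5
```

In this toy sample the coders agree on four of six comments, yet κ is only 0.5 because a third of that agreement would be expected by chance.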

Modern thematic analysis software helps you maintain objectivity and spot coding discrepancies early.

The bottom line: come prepared with a codebook, ground it in theory, and verify that everyone's coding is in sync.

Busy insights teams can move from data to executive presentation in a single workday.

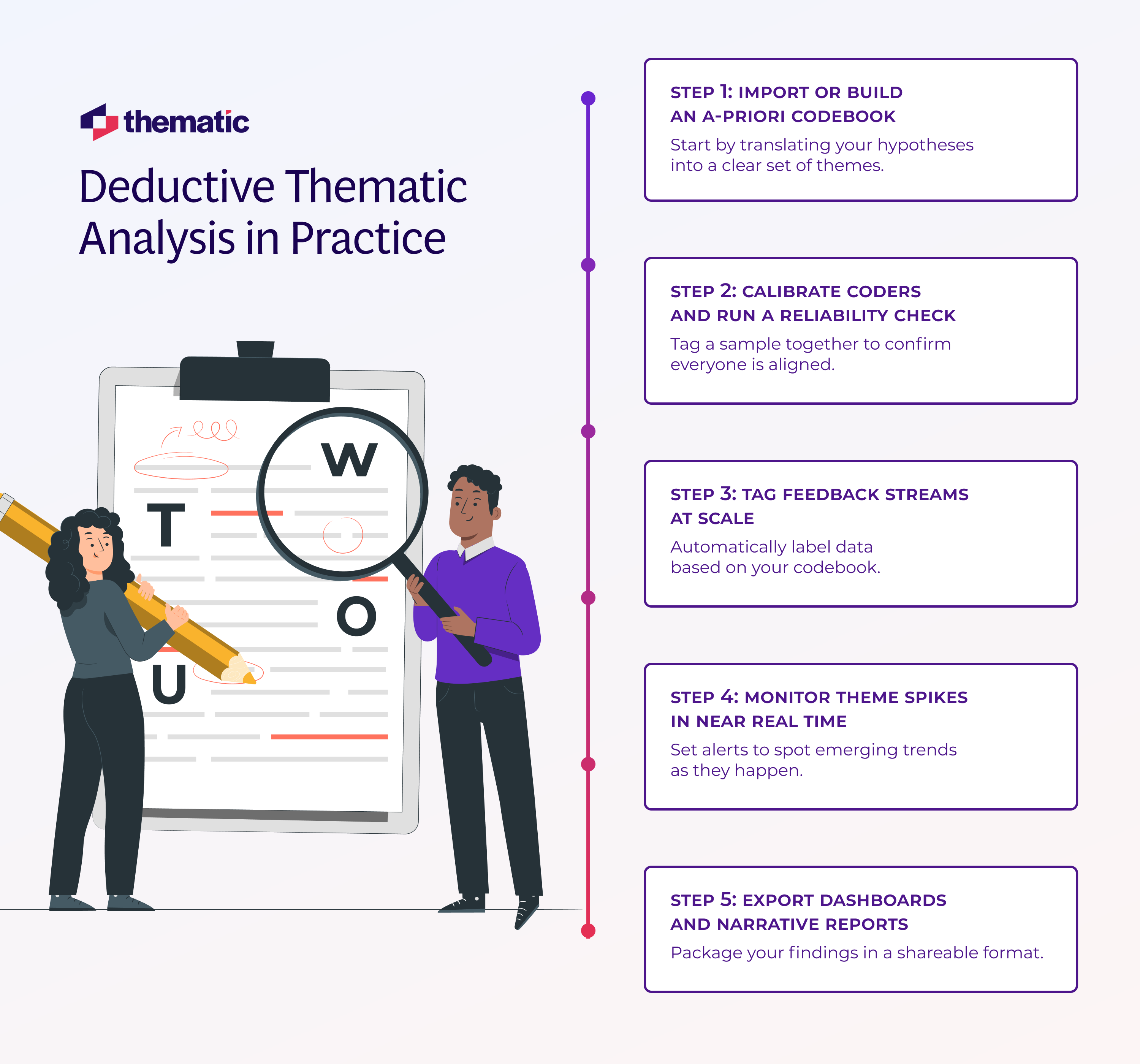

Here's a practical workflow you can adapt whether you use spreadsheets, statistical packages, or a purpose-built platform. Each step keeps your theory-first lens front and center while leaving room for quick course corrections.

Start by translating your hypotheses into a clear set of themes.

Many teams draft the codebook in a shared sheet, then upload it to their analysis software. Give every theme a snappy name, a one-sentence definition, and a few real examples (here's how coding qualitative data works best).

That detail prevents "theme creep" later on.

A retail team, for instance, might load themes like Delivery Time, Product Quality, and Price Fairness. With these themes, every data point has an obvious home. Your analysts aren't guessing where comments belong.

Before you analyze the full dataset, run a small pilot (say, 100 comments) to verify everyone tags consistently.

Have at least two coders label the same sample, then calculate Cohen's κ or Krippendorff's α.

Anything below 0.75 often signals vagueness in your definitions.

A 10-minute sync call can clear up why Delivery Time looked fuzzy and nail down wording. By midday, you'll have tweaked or merged unclear themes and locked a solid codebook.
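
One way to prepare for that sync call is to tally which pairs of themes the two coders confused most often. The sketch below assumes one label per comment per coder; the function name and the pilot labels are hypothetical.

```python
from collections import Counter


def disagreement_pairs(labels_a, labels_b):
    """Count how often two coders picked each differing pair of themes."""
    pairs = Counter()
    for a, b in zip(labels_a, labels_b):
        if a != b:
            # Sort so (A, B) and (B, A) count as the same confusion.
            pairs[tuple(sorted((a, b)))] += 1
    return pairs.most_common()


# Made-up pilot labels from two coders on the same five comments.
coder_a = ["Delivery Time", "Delivery Time", "Product Quality",
           "Price Fairness", "Product Quality"]
coder_b = ["Product Quality", "Delivery Time", "Delivery Time",
           "Product Quality", "Product Quality"]

for pair, count in disagreement_pairs(coder_a, coder_b):
    print(pair, count)
```

The pair at the top of the list points to the definitions most in need of tighter wording.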

With the codebook finalized, push it across your entire corpus: support tickets, survey verbatims, app-store reviews.

Modern text-analysis tools can batch-process thousands of lines in minutes. Even a manual pass works if the volume is small.

The key is automation rules: map synonyms (slow shipping, late package) to the same theme so every variation rolls up correctly.

The payoff? Your dashboard now shows how often each theory-driven theme surfaces, without weeks of hand coding.
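
The synonym-mapping idea can be sketched with plain keyword rules. This is a deliberately simple illustration, not how any specific platform tags text: the `SYNONYMS` table, its phrases, and the function names are assumptions made for the example.

```python
import re

# Hypothetical synonym rules: each theme lists phrases that should roll up to it.
SYNONYMS = {
    "Delivery Time": ["slow shipping", "late package", "arrived late"],
    "Price Fairness": ["overpriced", "too expensive"],
}


def compile_rules(synonyms):
    """Build one case-insensitive, whole-phrase pattern per theme."""
    return {
        theme: re.compile(
            "|".join(r"\b" + re.escape(phrase) + r"\b" for phrase in phrases),
            re.IGNORECASE,
        )
        for theme, phrases in synonyms.items()
    }


def tag_comment(comment, rules):
    """Return every theme whose phrases appear in the comment."""
    return [theme for theme, pattern in rules.items() if pattern.search(comment)]


rules = compile_rules(SYNONYMS)
print(tag_comment("Slow shipping, and honestly it's overpriced.", rules))
# ['Delivery Time', 'Price Fairness']
```

Exact-phrase rules miss paraphrases and misspellings, so a sketch like this suits small volumes or a first pass rather than a full corpus.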

Thematic automates this step by letting you import codebooks and auto-tag feedback at scale, while maintaining research-grade accuracy through human-in-the-loop validation.

Set thresholds or simple scripts that flag unusual jumps in theme volume or sentiment.

Did Login Issues double overnight, or did sentiment analysis reveal a sharp dip in Onboarding Experience positivity?

A quick alert, whether via email, Slack, or a BI tool, lets you spot anomalies when they're small.

Ask yourself: "Is this a true trend or just noise?"

A 5-minute peek at the raw comments usually reveals the cause.
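
A threshold check of this kind can be as small as the sketch below, which compares theme counts between two periods. The function name, the default ratio of 2.0, the minimum count of 10, and the sample counts are all assumptions picked for illustration; sensible values depend on your volume.

```python
def flag_volume_jumps(previous, current, ratio=2.0, min_count=10):
    """Return themes whose count grew by at least `ratio` between two periods.

    `min_count` suppresses alerts for themes with too few mentions to matter.
    """
    flagged = {}
    for theme, now in current.items():
        before = previous.get(theme, 0)
        # max(before, 1) avoids treating every brand-new theme as infinite growth.
        if now >= min_count and now >= ratio * max(before, 1):
            flagged[theme] = (before, now)
    return flagged


# Made-up daily counts per theme.
yesterday = {"Login Issues": 20, "Pricing": 30}
today = {"Login Issues": 45, "Pricing": 33, "Eye Strain": 3}

print(flag_volume_jumps(yesterday, today))  # {'Login Issues': (20, 45)}
```

The flagged themes are the ones worth a quick look at the raw comments before deciding whether the change is a trend or noise.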

Finally, package your findings for stakeholders.

Most teams combine a theme-frequency chart, a sentiment trend, and a handful of verbatim quotes for color. Keep visuals simple: bar graphs for volume, line charts for change over time.

Tie every chart back to the original hypothesis so leaders understand the business impact at a glance, especially when prioritizing CX investment using thematic critical feedback points.

By end of day, you'll have a concise deck or live link to share in tomorrow's leadership meeting.

Deductive thematic analysis pays off when you already have a clear hypothesis or regulatory checklist to test.

It falls short when you need open-ended discovery or you're working with tiny, highly nuanced datasets.

The tables below spell out the ideal situations and red-flag scenarios, backed by recent industry and research sources.

Even champions of deductive thematic analysis warn that a theory-first lens has limits. If your codebook is too rigid, you may overlook fresh patterns, inject bias, or struggle with reliability when cases are few or contexts shift quickly.

The bottom line: Use deductive thematic analysis when you need to prove something you already suspect or comply with a fixed standard. Switch to inductive or reflexive approaches when you need to discover what you don't yet know.

A theory-first approach is powerful, but it's not foolproof. Two big pitfalls can lead to missing important new insights.

This is the tendency to see what you expect to see.

If you're convinced that "Pricing" is the only thing customers care about, you might unintentionally overlook other themes popping up in the data. Say you're reviewing comments, laser-focused on price-related feedback. A major complaint about a new product defect could be missed because it wasn't on your predefined list.

How to avoid this: Stay open to the unexpected.

Even with a set codebook, occasionally scan a few responses outside your categories or have a colleague review them. Ask yourself: "Could there be an important theme here that isn't in my codebook?"

If the answer might be yes, take a step back and consider adjusting your framework.
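
One lightweight way to build that habit is to track how much feedback matched no theme at all and pull a random handful for manual review. The sketch below assumes comments have already been tagged; the function name, parameters, and sample data are hypothetical.

```python
import random


def uncoded_sample(tagged, sample_size=5, seed=0):
    """Return (share of comments with no theme, random sample of those comments).

    `tagged` is a list of (comment, themes) pairs, where `themes` is a list.
    """
    uncoded = [comment for comment, themes in tagged if not themes]
    share = len(uncoded) / len(tagged) if tagged else 0.0
    rng = random.Random(seed)  # fixed seed so the review sample is reproducible
    return share, rng.sample(uncoded, min(sample_size, len(uncoded)))


# Made-up tagged comments.
tagged = [
    ("Too expensive for a small team", ["Pricing"]),
    ("The new model cracks after a week", []),
    ("Price went up again", ["Pricing"]),
    ("Hinge broke on day two", []),
]

share, to_review = uncoded_sample(tagged, sample_size=2)
print(f"{share:.0%} of comments matched no theme")
```

A rising uncoded share is a hint that the codebook may be missing a theme.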

Closely related is the danger of an over-rigid taxonomy.

If your theme definitions are too narrow or you refuse to modify the codebook once analysis starts, you risk forcing every bit of feedback into pre-set boxes that don't quite fit.

Real data can surprise you.

For instance, during a software beta test, users might start talking about "eye strain from the interface"—a topic you never anticipated in your codebook about feature bugs. Losing such emergent insights can be costly.

The solution: Build in hybrid safety nets.

Give yourself permission to add a new theme or refine an existing one when a genuinely new pattern emerges. Thematic's platform supports this flexibility: you can merge similar themes or create a new one on the fly if you spot something novel.

This way, you maintain a deductive structure without becoming blind to what the data is telling you.

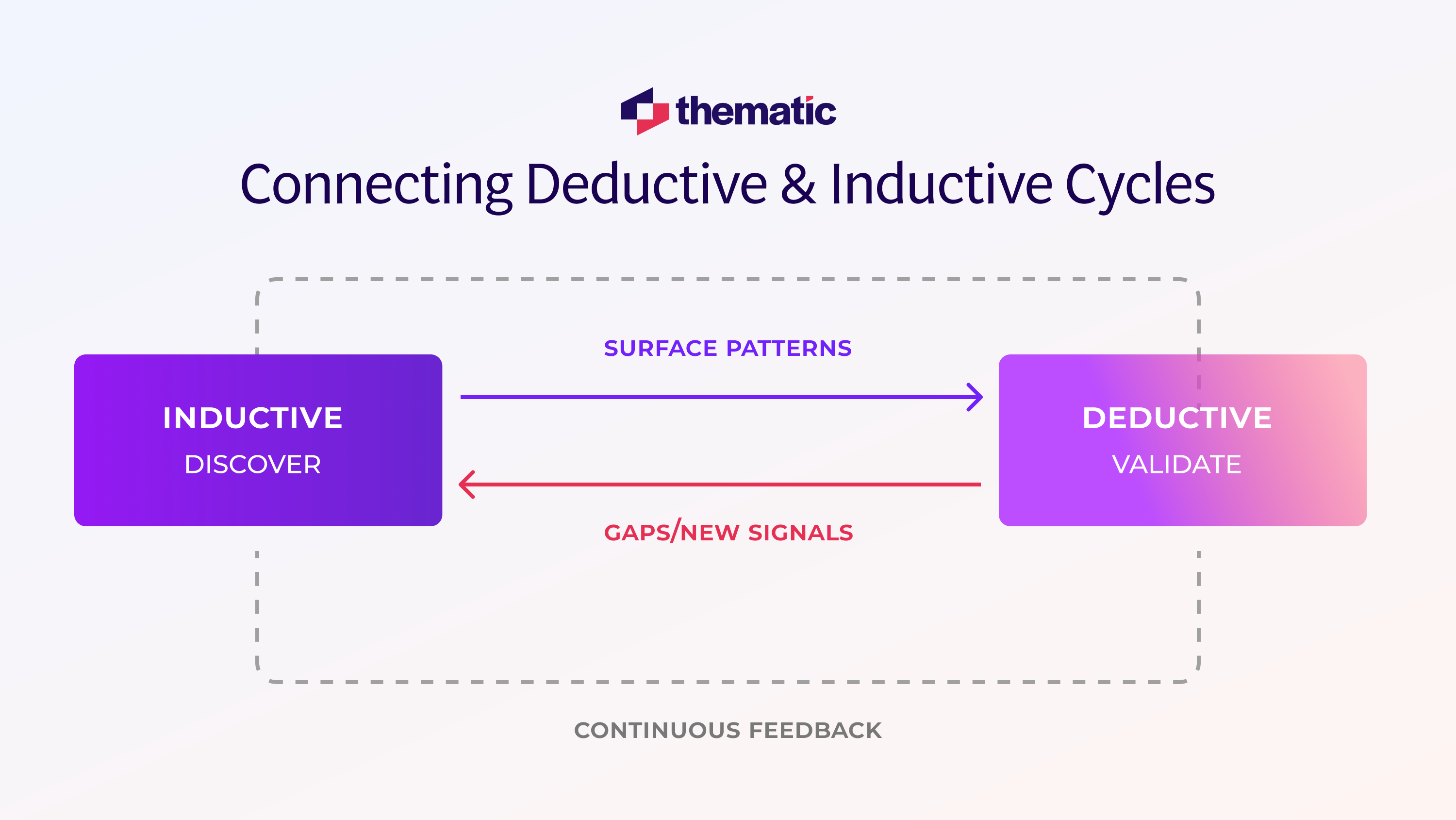

The best insights come from cycling between deductive and inductive approaches, not choosing one forever.

Think of your analysis as a cycle: you might begin with open-minded exploration and then switch to theory-first validation (or vice versa).

Suppose you start with an inductive thematic analysis on a new set of customer comments because you're not sure what's in there.

If you're using an AI-powered tool, the AI combs through and suggests themes based purely on the data. You didn't give it any preconceived categories, so it surfaces organic patterns. Maybe it finds that "Subscription Cancellation" is a major theme you hadn't considered.

Now you've made a discovery.

Next, you take that insight and switch into deductive mode. You formalize "Subscription Issues" as a theme in your codebook (perhaps with sub-themes under it) and apply it to the next wave of incoming feedback.

With this refined codebook, you re-run the analysis (or apply it to next month's data) to verify and quantify how prevalent that theme really is.

In essence, you used induction to find a clue and deduction to confirm and measure it.

The reverse can happen too.

You might begin deductively, for example, using a known framework like Maslow's hierarchy to categorize employee feedback, and then notice that a chunk of comments don't fit any of your pre-set themes.

That's a sign to pivot back to an exploratory mindset.

Maybe you run a quick inductive pass on those "miscellaneous" comments and discover a new theme, say, "Work-from-home challenges," emerging. You can then fold this insight into your deductive codebook and continue.

This agile switching ensures you're not missing emergent topics while still maintaining the efficiency and focus of a theory-driven approach.
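
As a crude stand-in for the quick inductive pass on leftover comments, the sketch below counts how many unassigned comments mention each word. Real inductive analysis, whether manual or AI-assisted, is far richer than word counts; the stopword list, function name, and sample comments are assumptions for illustration only.

```python
import re
from collections import Counter

# Small illustrative stopword list; a real one would be much longer.
STOPWORDS = {"the", "a", "an", "and", "is", "it", "to", "of", "my", "i",
             "in", "for", "on", "at", "from", "with", "not"}


def candidate_terms(comments, top_n=3):
    """Return the words that appear in the most comments (one count per comment)."""
    counts = Counter()
    for comment in comments:
        words = set(re.findall(r"[a-z']+", comment.lower())) - STOPWORDS
        counts.update(words)
    return counts.most_common(top_n)


# Made-up comments that did not fit any pre-set theme.
miscellaneous = [
    "Working from home makes meetings hard",
    "Home internet is unreliable for meetings",
    "Home office setup is not reimbursed",
]

print(candidate_terms(miscellaneous, top_n=2))  # [('home', 3), ('meetings', 2)]
```

Frequent terms like these are only prompts for a human to read the comments and decide whether a new theme belongs in the codebook.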

Deductive thematic analysis helps you test what matters, fast.

When you start with a clear hypothesis and a solid codebook, your analysis becomes focused, efficient, and easier to act on. But it's not about rigid rules. It's more a structure with room to adjust.

The smartest teams cycle between deductive and inductive approaches as their data evolves.

With Thematic, you can do both seamlessly. Our platform gives you transparent, research-grade codebook management with human-in-the-loop control. Import your codebook, auto-tag thousands of comments, and maintain auditable records that executives can trust.

So if you already have a theory to validate or themes to monitor, now's the time to put them to the test with speed, clarity, and confidence.

See how Thematic's transparent codebook management simplifies deductive analysis. Book a guided trial.

Deductive thematic analysis uses pre-defined themes based on theory or hypotheses to analyze feedback. You start with a codebook of expected themes, then tag data to test whether your theory holds true. It's faster than exploratory methods when you know what to look for.

Deductive analysis starts with predetermined themes to test a theory; inductive analysis lets themes emerge naturally from the data. Use deductive when validating hypotheses or testing known frameworks. Use inductive for open-ended discovery when you don't know what patterns exist.

Thematic supports deductive analysis by letting teams import ready-made codebooks, auto-tag feedback at scale, and maintain transparent control over theme definitions. Unlike black-box tools, Thematic combines automation with human-in-the-loop validation, making analysis auditable and research-grade.

Start by translating your hypotheses into clear themes. Give each theme a name, one-sentence definition, and 2-3 examples. Ground themes in theory or research frameworks. Document definitions in a shared sheet so all coders understand what to tag. Run a pilot test to verify consistency.

Yes. Thematic automates tagging using your predefined codebook while giving researchers transparent control to edit, merge, or add themes as patterns emerge. The system maintains auditable records of every coding decision, combining speed with research-grade rigor that executives can trust.

Run a pilot with 2+ coders on 100 comments. Calculate Cohen's κ or Krippendorff's α. Aim for 0.75 or higher. If scores are low, clarify theme definitions and re-test. Modern platforms like Thematic flag discrepancies automatically and suggest where definitions need tightening.

Use deductive when testing known theories, validating hypotheses, or checking compliance requirements. Use inductive for exploratory research with no preset framework or when discovering unexpected patterns. Smart teams cycle between both as data evolves. Start inductive to discover, then switch deductive to validate and scale.
