From Ignored to Indispensable: The Proven, Step-by-Step CX Insights Mastery Blueprint in < 6 Days! Get free access>

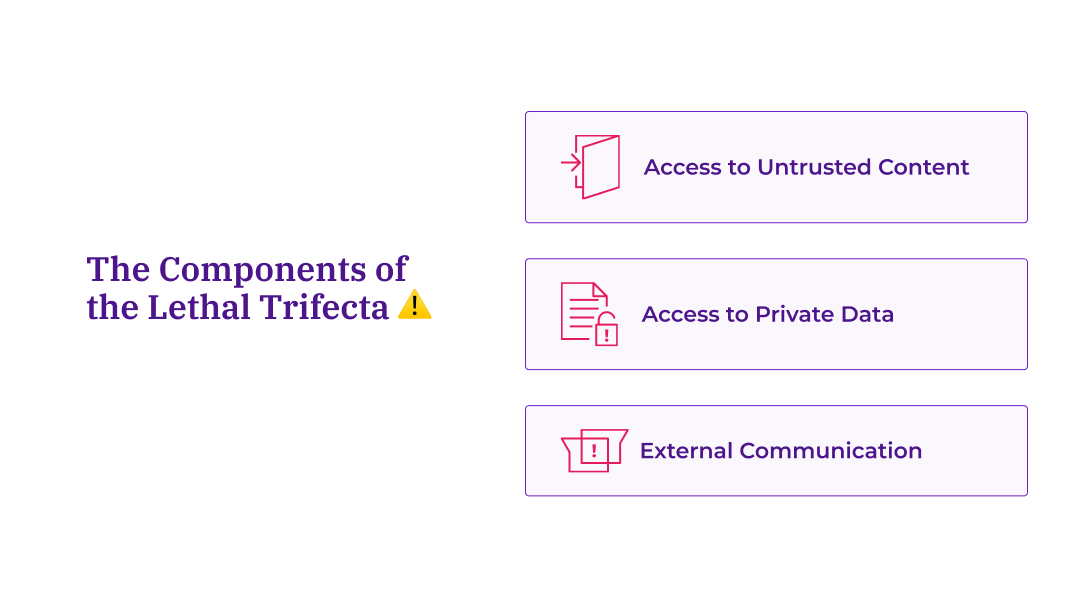

AI agents combining untrusted input, private data access, and external communication create the "Lethal Trifecta" which is a critical security vulnerability.

Most AI agents have a dangerous design flaw: they combine untrusted input, private data access, and external communication: the "Lethal Trifecta."

This makes them vulnerable to prompt-injection attacks.

Thematic eliminates this risk by cutting off external communication: our LLM analyzes data and displays insights, but cannot autonomously communicate externally. Human users control all external actions, ensuring customer data stays secure.

Large Language Models (LLMs) are transforming how we work, but this incredible utility comes with novel and significant security risks. As we integrate AI agents into our most sensitive workflows, we must understand the core design flaw that many systems share—a flaw that cybersecurity expert Simon Willison calls the "Lethal Trifecta."

While security teams understand how to lock down traditional applications, the very nature of an effective AI agent often circumvents these established security models. The "value" of a powerful AI is frequently measured by its ability to execute a dangerous combination of three distinct functions.

The three elements that, when combined in a single AI system, create a serious data breach vulnerability are:

When an AI agent is designed to perform all three of these actions—the Lethal Trifecta—the system is inherently vulnerable to a new class of prompt-injection attacks that can lead to data exfiltration and compromise.

To illustrate the danger, consider these common LLM-powered use cases:

Remote Code Execution (RCE) and data exfiltration are not new attack vectors. However, the combination of LLMs with the Lethal Trifecta introduces two novel, game-changing factors:

Just because you've secured traditional software, doesn't mean you've secured an LLM. New attacks exploit the very nature of language and instruction-following.

If you are evaluating an AI system or designing an agent, you must be able to spot this dangerous pattern. It can be hard to spot in complex workflows, but the main questions to ask are:

This approach is consistent with best practices being developed across the industry. Meta's security guidance for AI agents, often called the "Rule of 2," advocates for the same strategy: ensure you cut off one of the legs. The core message is that if an agent has access to sensitive data, it must be severely restricted in what it can ingest or what it can output to the outside world.

At Thematic, our foundational security principle is to not allow all three components of the Lethal Trifecta in the same system. This requires a deliberate design choice to cut off one of the "legs" of the triangle.

We primarily cut off the External Communication leg, ensuring that our core LLM-powered services cannot act autonomously in external systems.

The LLM-powered system is restricted to merely displaying text to a human user; it cannot post, email, or execute code on its own.

Furthermore, we impose constraints on the AI output itself. Not all AI needs to be able to create or do 'anything'.

We have gates in place where the AI can only create structured data (like JSON objects with enforced structures or simple values), which are then used as inputs to other, highly controlled systems. This deterministic approach allows us to reason about exactly what can be done, eliminating the unpredictability that leads to security flaws.

This entire discussion revolves around the LLM agent itself being the orchestrator of the three legs. It does not mean that your entire platform cannot have these capabilities.

For example, Thematic does have an 'email the answer' button, and we do integrate with data warehouses. The crucial distinction is that the LLM is not aware of these final actions and cannot control them.

A human user must intentionally press the button, or an external rules-based system, which is fully deterministic and auditable, handles the data warehousing logic.

The security risk is present when the LLM is doing all three actions simultaneously and autonomously. By separating the LLM's intelligence from the platform's execution, we ensure that the system remains safe and auditable.

Join the newsletter to receive the latest updates in your inbox.

Transforming customer feedback with AI holds immense potential, but many organizations stumble into unexpected challenges.